I’ve always wondered if film critics are biased towards certain types of movies, so I thought I would take a look at what the data can tell us. I looked at the 100 highest-grossing films from each of the past 20 years, which gave me 2,000 films to study. I then cross-referenced data from IMDb’s user votes, Rotten Tomatoes’ audience percentage, Metacritic’s Metascore and Rotten Tomatoes’ Tomatometer. In summary…

- Film critics and audiences do rate films differently

- Film critics are tougher and give a broader range of scores

- Film critics and audiences disagree most on horror, romantic comedies and thrillers.

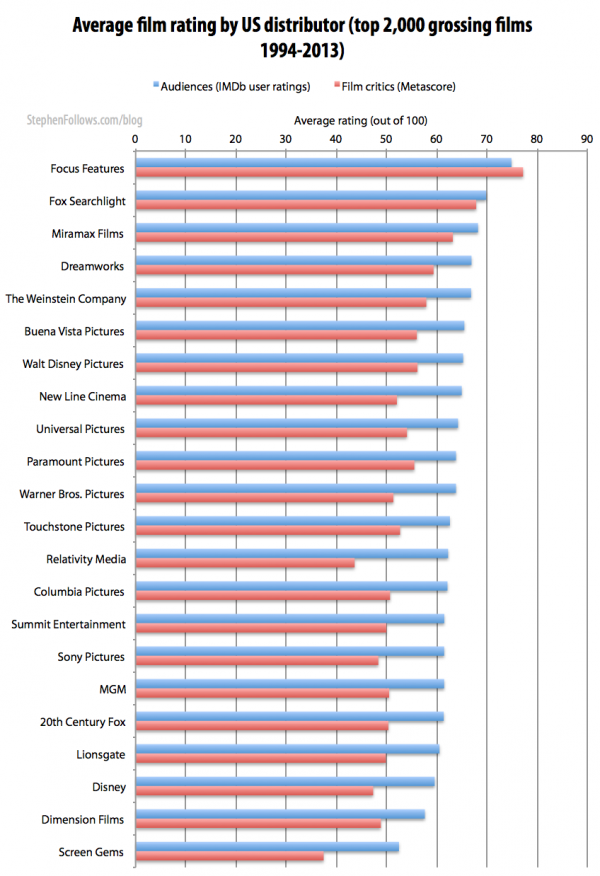

- Audiences and film critics gave the highest ratings to films that were distributed by Focus Features.

- Film critics really do not like films distributed by Screen Gems

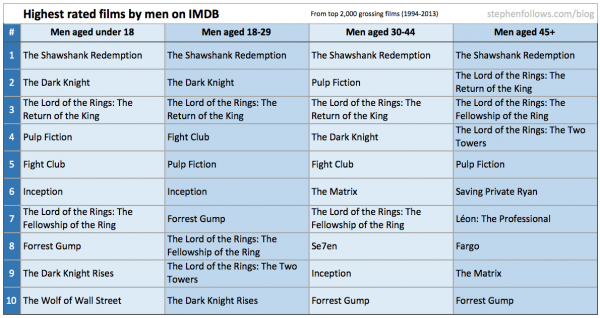

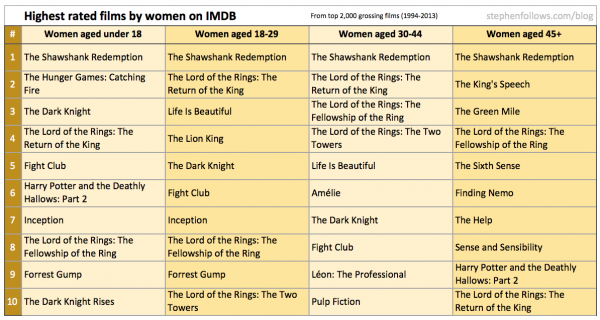

- The Shawshank Redemption is the highest rated film in every audience demographic

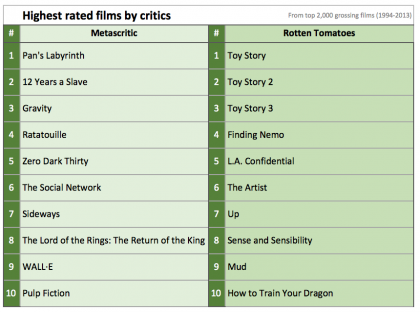

- Film critics rated 124 other films higher than The Shawshank Redemption

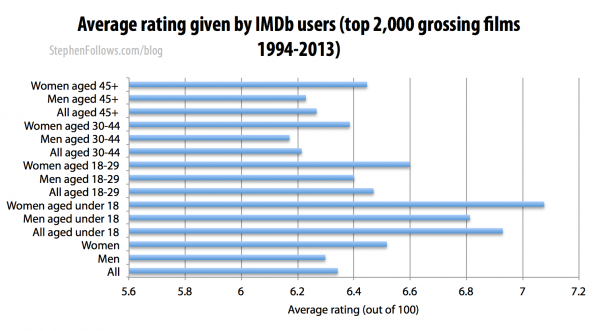

- Female audience members tend to give films higher scores than male audiences

- Older audience members give films much lower ratings than younger audiences

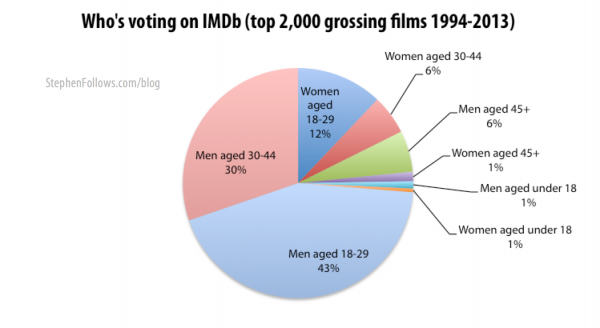

- Rotten Tomatoes seems to have a higher proportion of women voting than IMDb, where only 1 in 5 votes comes from a woman.

- IMDb seems to be staffed by men aged between 18 to 29 who dislike Black Comedies and love the 1995 classic ‘Showgirls’.

Do film critics and audiences rate in a similar way?

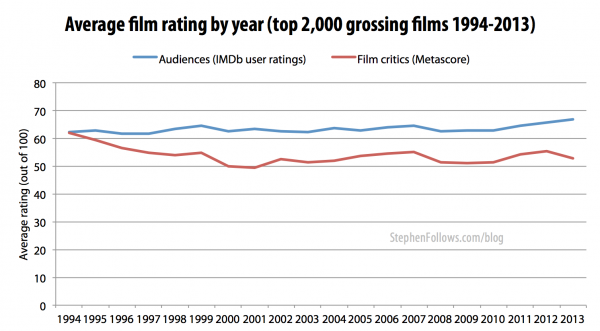

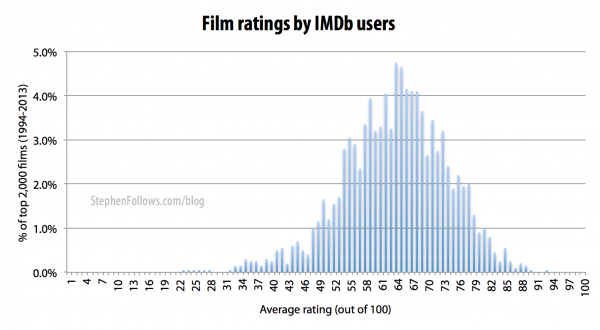

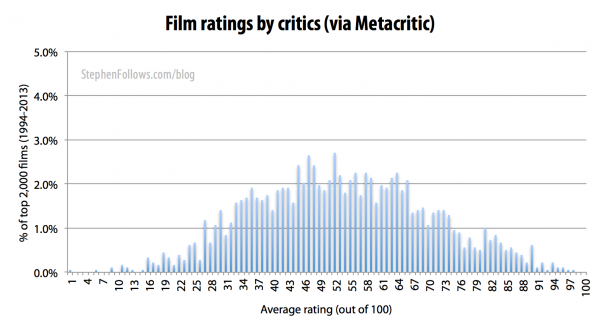

No. Film critics’ and audiences’ voting patterns differ in two significant ways. Firstly, critics give harsher judgements than audience members. Across all films in my sample, film critics rated films an average of 10 points lower than audiences (on a 100-point scale). Secondly, film critics also give a wider spread of ratings than audiences. On IMDb, half of all films were rated between 5.7 and 7.0 (out of 10) whereas half of all films on Metacritic were rated between 41 and 65 (out of 100).

This pattern was repeated on Rotten Tomatoes. Unlike IMDb and Metacritic, Rotten Tomatoes measures the percentage of users or critics who give the film a positive review. Half of all films received an audience rating of between 47% and 76%, compared with between 28% and 73% from film critics.
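As a rough illustration, the ranges quoted above can be put on a single 100-point scale and compared by width. This is only a sketch: the names `reported_ranges`, `range_width` and `middle_half` are hypothetical, the sample list in the last line is invented, and `statistics.quantiles` is just one reasonable way to find the middle 50% of a set of scores, not necessarily how the charts here were produced.

```python
from statistics import quantiles

# Middle-50% ranges quoted in the text, on a 100-point scale
# (IMDb's 5.7 to 7.0 out of 10 becomes 57 to 70).
reported_ranges = {
    "IMDb users": (57, 70),
    "Metacritic critics": (41, 65),
    "Rotten Tomatoes audience": (47, 76),
    "Rotten Tomatoes critics": (28, 73),
}


def range_width(low, high):
    """Width of the band that holds the middle half of all films."""
    return high - low


def middle_half(scores):
    """Return (Q1, Q3), the bounds of the middle 50% of a list of scores."""
    q1, _median, q3 = quantiles(scores, n=4, method="inclusive")
    return q1, q3


widths = {name: range_width(*band) for name, band in reported_ranges.items()}

if __name__ == "__main__":
    for name, width in widths.items():
        print(f"{name}: middle half spans {width} points")
    # Invented scores, only to show how such a band could be derived.
    print(middle_half([0, 25, 50, 75, 100]))
```

On these figures the critics' bands are wider on both kinds of scale: 24 points against 13 for the averaged scores, and 45 against 29 on Rotten Tomatoes.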

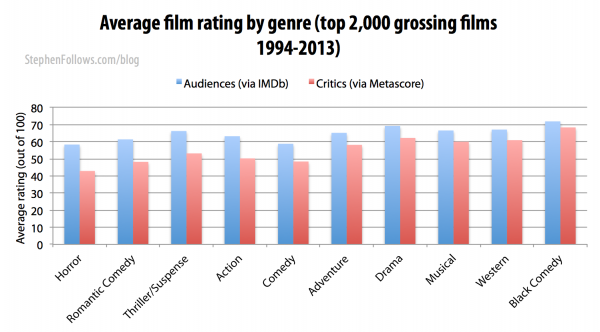

Which genres divide film critics and audiences?

The difference between the ratings given by film critics and audiences is most pronounced within genres that are often seen as populist ‘popcorn’ fare, such as horror, romantic comedy and thrillers. Both film critics and audiences give the highest ratings to black comedies and dramas.

Do audiences and film critics share the same favourite films?

No. All segments of audiences put The Shawshank Redemption as their top film but it doesn’t even make it into the film critics’ top ten (it’s at #125 out of the 2,000 films I investigated).

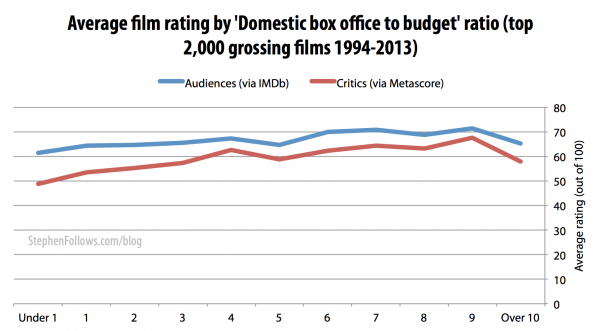

Do the most financially successful films get the highest ratings?

Yes. I charted film ratings against the ratio of North American Box Office gross divided by the production budget (as I feel this gives a fairer representation of a film’s performance than just the raw amount of money it grossed).
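The ratio described here is simple to compute. The sketch below is a minimal example with hypothetical names (`gross_to_budget_ratio`, `films`) and invented figures; it is not the data behind the chart.

```python
def gross_to_budget_ratio(domestic_gross, production_budget):
    """North American box office gross divided by production budget."""
    if production_budget <= 0:
        raise ValueError("production budget must be positive")
    return domestic_gross / production_budget


# Invented films and figures (US dollars), for illustration only.
films = [
    {"title": "Small Film", "gross": 30_000_000, "budget": 5_000_000},
    {"title": "Big Film", "gross": 200_000_000, "budget": 150_000_000},
]

ratios = {
    film["title"]: gross_to_budget_ratio(film["gross"], film["budget"])
    for film in films
}
```

In this made-up case the smaller film grossed far less in raw terms but returned six times its budget, which is the kind of difference the ratio is meant to surface.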

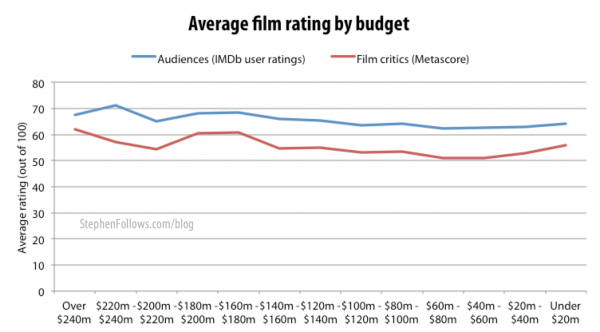

Do bigger budgets mean higher ratings?

Yes, both audiences and film critics prefer films with bigger budgets.

Do film critics and audiences agree which companies distribute the ‘best’ films?

Yes. Films distributed by certain companies consistently get the best reviews. This is not hugely surprising as we have already seen how film critics favour certain types of films and distribution companies tend to focus on certain types of films. Looking at company credits in all four scoring systems, Focus Features took the top spot, with Fox Searchlight second and Miramax coming in a close third.

Do critics and audiences agree which companies distribute the ‘worst’ films?

Yes, although there is less consensus than for the ‘best’ films. Films distributed by Screen Gems received the lowest scores on IMDb, Metacritic and the Tomatometer (they were second worst for audiences on Rotten Tomatoes).

Which distributors divide audiences and film critics?

Although the league tables of companies listed above are pretty similar, audiences and critics disagree about how good the ‘best’ are and how bad the ‘worst’ are. Film critics absolutely love films from Focus Features, to the extent that Focus is the only company whose films score higher with critics than with audiences.

Films distributed by Screen Gems, Relativity Media and New Line Cinema tend to get much poorer scores from critics than they do from audiences. Films by Relativity Media average 62/100 from audiences on IMDb whereas film critics only give them 44/100 on average.

Similarly, on Rotten Tomatoes, films by Screen Gems received positive reviews from 54% of audiences and only 28% of film critics.

Who is voting on IMDb?

Across my 2,000 films, I tracked over 174 million votes. Yup, that’s a lot! However, those votes are not evenly spread. For starters 80% of votes were cast by men. Also, 54% of all votes are from people between the ages of 18 and 29.

Rotten Tomatoes appears to be better than IMDb at representing women

Rotten Tomatoes does not offer a split by gender. However, by comparing its results with the gender breakdown of IMDb votes, we can speculate that Rotten Tomatoes has a more equal gender split than IMDb, where 80% of votes come from men. My deduction is due mostly to there being many female-skewed films in the Rotten Tomatoes audience top 20, including Life Is Beautiful, The Pianist and Amelie (see earlier in this article for female Top 10 films).

Who works at IMDb?

In the breakdown of user votes on IMDb, they mention votes by IMDb staff. I wouldn’t worry about IMDb staff votes skewing the overall score as they accounted for only 0.01% of the total votes cast. But this let me have a quick bit of fun looking at what this information reveals about the kinds of people who work at IMDb.  The voting patterns indicate that IMDb staff are…

- Mostly male, aged between 18 and 29 years old

- Enjoy horror films and dislike Black Comedies more than most IMDb users

- They drink the Hollywood Kool-Aid, as shown by their disproportionate love of bigger budgets and movie spin-offs.

- They liked Showgirls a lot more than the average user

If anyone from IMDb is reading this, please can you do a quick straw poll in the office and let me know how far off I am? First one who does gets a limited edition Blu-Ray of Showgirls (as a back-up for the one you already own).

Methodology – The scoring systems I studied

I used four scoring systems to get a sense of how much “the public” and “the film critics” liked each film. In the blue corner, representing “The Public” we have…

- IMDb user rating (out of 10) – IMDb users rate films out of 10 (the one exception being This Is Spinal Tap, where the rating is out of 11). IMDb displays the weighted average score at the top of each page, and provides a more detailed breakdown on the ‘User Ratings’ page. This outlines in detail how different groups rated that film, including by gender, age group and IMDb staff members.

- Rotten Tomatoes audience score (a percentage) – The percentage of users who gave the film a positive score (at least 3.5 out of 5) on Rotten Tomatoes and Flixster websites.

And in the red corner, defending the honour of film critics everywhere, are…

- Metacritic’s Metascore (out of 100) – Metacritic aggregates the scores given by a number of selected film critics and provides a weighted average. There is a breakdown of their methodology here.

- Rotten Tomatoes’ Tomatometer (a percentage) – The percentage of selected film critics who gave the film a positive review.

Comparing the different scoring systems

Both of the Rotten Tomatoes scoring systems look at the percentage of people who gave a film a positive review, whereas IMDb and Metacritic are more interested in weighted averages of all the actual scores. This led me to wonder: are the two ‘public’ systems interchangeable with each other, and likewise the two ‘critics’ systems? No. On the surface they seem similar but in the details they differ wildly.

- Audience: IMDb versus Rotten Tomatoes – The average IMDb user score equates to 63/100 and the average Rotten Tomatoes score is 61/100. These are quite close, but in the details they are quite different. For example, when looking at horror films, on IMDb they average 58/100 whereas on Rotten Tomatoes it’s 49/100.

- Film Critics: Metacritic versus Tomatometer – The rival film critics’ scoring websites are in a similar situation, with close overall averages (53/100 on Metacritic and 51/100 on Tomatometer) but wildly different scores under the surface. (The average ‘Unrated’ film received 59/100 at Metacritic but just 35/100 on the Tomatometer).

Each system is valid but they should not be compared with one another.
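A small sketch can show why an average and a percentage-positive score behave differently. The 3.5-out-of-5 threshold is the Rotten Tomatoes audience rule described above; everything else (the function names, the plain unweighted mean, and the two sets of star ratings) is a hypothetical simplification, since the real sites use weighted averages.

```python
from statistics import mean

# Rotten Tomatoes audience rule: at least 3.5 out of 5 counts as positive.
POSITIVE_THRESHOLD = 3.5


def average_out_of_100(ratings, scale=5):
    """Plain (unweighted) average, rescaled to 0-100."""
    return mean(ratings) * 100 / scale


def percent_positive(ratings, threshold=POSITIVE_THRESHOLD):
    """Share of ratings at or above the threshold, as a percentage."""
    positive = sum(1 for rating in ratings if rating >= threshold)
    return positive / len(ratings) * 100


# Invented star ratings (out of 5) for two imaginary films.
consistent_film = [3.5, 3.5, 3.5, 3.5]
divisive_film = [5, 5, 2, 2]
```

Both imaginary films average 70/100, yet the first scores 100% positive and the second only 50%, so two films that look identical under one system can sit far apart under the other.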

Limitations

There are a number of limitations to this research, including…

- Reliance on third parties – The data for today’s article came from a number of sources. I used IMDb, Rotten Tomatoes, Metacritic, Opus and The Numbers. This means that all of my data has been filtered to some degree by a third party, rather than being collected directly from source by me.

- Films selected via Domestic BO – The 2,000 films I studied were the highest grossing at the domestic box office. This was because international box office figures become more unreliable as we look further back in time and at films lower down the box office chart.

- Selection bias – This is at almost every level; only certain types of users vote online, only certain film critics are included in the aggregated film critics’ scores (some are also given added weighting by Rotten Tomatoes and Metacritic) and I also manually excluded films with an extremely small number of votes (under 100 for user votes and under 10 for critics).

- Companies – The film industry involves a large number of specialised companies working together to bring films to audiences. There may be a few production companies, backed by a Hollywood studio, who then pass the film on to a different distributor in each country and sometimes different distributors for different media (i.e. cinema, DVD, TV and VOD rights). In the ‘companies’ section of this research I chose to focus on US distributors who had distributed at least 10 films. Given unlimited resources, I would have preferred to study the originating production company as I feel they have a larger impact on the quality of the final film. Secondly, some films were released through joint arrangements between two distribution companies. I excluded these films from the company results as I don’t have the knowledge to be able to judge the principal company in each case. They accounted for a small number of films and so wouldn’t massively skew the results. Finally, some companies have been bought out by others and some have been closed down. I have used the names of the companies at the point the film was distributed, meaning that Miramax makes an appearance, as do both USA Films and Focus Features, despite the fact they are now the same company.
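The exclusion rules listed above (at least 100 user votes, at least 10 critics, distributors with at least 10 films, joint releases left out) could be expressed along these lines. This is a sketch under assumptions: the dictionary keys `user_votes`, `critic_reviews` and `distributors` are hypothetical field names, not the structure of the actual dataset.

```python
from collections import Counter

MIN_USER_VOTES = 100            # films with fewer user votes were excluded
MIN_CRITIC_REVIEWS = 10         # films with fewer critic reviews were excluded
MIN_FILMS_PER_DISTRIBUTOR = 10  # distributors needed at least this many films


def has_enough_votes(film):
    """True if a film clears both the user and the critic thresholds."""
    return (
        film["user_votes"] >= MIN_USER_VOTES
        and film["critic_reviews"] >= MIN_CRITIC_REVIEWS
    )


def eligible_distributors(films, minimum=MIN_FILMS_PER_DISTRIBUTOR):
    """Distributors with at least `minimum` films released on their own."""
    counts = Counter(
        film["distributors"][0]
        for film in films
        if len(film["distributors"]) == 1  # joint releases are left out
    )
    return {name for name, count in counts.items() if count >= minimum}
```

Each film is assumed to carry a list of distributor names, so a joint release is simply any film whose list has more than one entry.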

Epilogue

This is yet another topic where I got a bit carried away. It was fun to get a window into the tastes of different groups and to spot patterns. I have a second part to this research, which I shall share in the coming weeks. If you have a question you want me to answer with film data then please contact me. And if you work for IMDb – I’m serious about the Showgirls Blu-ray.

Comments

Interesting findings, proving a few things people have been guessing (audience vs. critics, budget vs. popularity and more)! Thanks, Stephen

Another fascinating survey but I didn’t follow the reference to Showgirls. Are you suggesting it was NOT a modern classic?

Are you now, or have you ever been, an employee of IMDb?

Jokes aside, I just thought it was a fun detail. I was looking at which films had the largest difference between the scores given by IMDb staff and by the general public, and Showgirls stood out.

Hi Stephen, it’s indeed a very thorough piece of research but I really don’t see the point of it. I find it by default quite wrong to “compare” critics and audiences because, except that the second read the reviews of the first… there is nothing else that connects them. So the “numbers” and the statistics here show certain things but I personally can’t see how helpful they can be in understanding critics or audiences. Let me clarify what I mean.

(a) Critics have an “audience” of their own, their readers. A film critic on a certain newspaper, magazine, blog, has a reason of existence only when he has an audience. So their perspective is always biased by the fact that their audience should see a consistency in their opinions. You cannot like “Andrei Rublev” and “Transformers” the same… On the other hand film audiences have no audience of their own, so what they like or not has no “consequences” for them.

(b) Most film critics are educated people. They watch e.g. “Gravity” and they notice the long single-shot and the camera movements outside in space and inside the character’s helmet and all the camera angles and sound design around those scenes. So they approach film in a very thorough way, they observe every aspect of the making of it. On the other hand the audience mainly watches a suspenseful scene with two actors they like.

(c) Film critics watch movies and write about them because for most Cinema is an Art. For the most massive percentage of the global film audience though Cinema – or simply put “a movie” – is Entertainment. The moment a film critic and a normal audience guy enter the dark hall they have already made a different type of choice and set a different mindset, expectation etc. of what will follow.

I don’t disagree with your research as such, I find it helpful for sales agents and maybe studio executives. But I don’t believe it should be related at all (!) with what you state in your opening sentence as a question if film critics “are biased towards certain types of movies”. They’re not biased. And they don’t care if the production company is ScreenGems or FocusFeatures. They care only if it is a good film or a bad film based on their education on the Art of Cinema, taste and aesthetics.

George, you raise some really great points. Thank you for taking the time as it’s good to have those non-data issues included on this page for readers.

On the main point of your comment, I think you may have taken my single use of the word ‘bias’ to suggest that this article is trying to answer the question ‘Are film critics biased?’. It’s not – it’s looking at how audiences and critics’ ratings compare.

I don’t approach these topics with an agenda or try to prove a particular point. They start with an intriguing thought and then I see what the data can tell us about that issue. My starting point was wondering about how film critics and audience view films differently. My mention of bias at the start is simply to give context as to why I chose this topic for study. Within the detail of the article I pose specific questions and then try to answer them by sharing relevant data.

You’re right that we can’t prove bias in this context. There is no objective measure of a film’s quality with which to contrast the opinions (or ratings) of critics or audiences. Every measure of how ‘good’ a film is (critics’ ratings, audience ratings, box office, etc) can be steeped in a number of influencing factors (star power of its cast/crew, marketing, other options available, etc).

So my point with the companies was not to say that ‘critics are biased towards Focus Features and against Screen Gems’, but rather to show the patterns in the numbers. If you look at the data you can see that audiences also rank Focus Features at the top, so this would actually work against any argument that critics are biased.

I was very careful about my choice of language in order to only express what the data reveals.

If I’m honest, it would be my opinion that these higher scores have more to do with the aims and business plans of Focus Features than any kind of conspiracy by critics. I pointed out that film companies tend to have a ‘type’ of film they make/distribute.

The word ‘favour’ here is not an accusation of bias but a statement of fact. In the article I am not speculating as to why they favour them, just observing that they do.

So overall I’d say that your points are entirely valid, and not inconsistent with the data shown in the article. It’s a very complicated issue that certainly won’t be ‘solved’ with data alone.

Hi Stephen and thanks for your thorough reply! Apologies for my belated one now…

You explain well your motive of making this research and I understand it and will never say anything negative really about you doing so. It is a great work you did and a probably helpful research on different levels for many film professionals. And thank you for appreciating my comments and actually finding them consistent with the data shown here!

My quite personal disagreement with the “point” of the research though, as I put it before, is based on a completely different perspective I have on the general matter of what Cinema IS for a critic and what IS for the majority of its audience.

For example: you mention now that “every measure of how ‘good’ a film is (critics’ ratings, audience ratings, box office, etc) can be steeped in a number of influencing factors (star power of its cast/crew, marketing, other options available, etc).”

From my point of view “critics” shouldn’t be included at all in this sentence because they simply don’t care less about the star power of the cast/crew, marketing etc. (and I’m not talking about self-appointed critics/bloggers who haven’t watched any of the masterpieces of the early years of cinema from D.W.Griffith to Ozu and Jules Dassin – I’m talking about the ‘real’ educated film critics). I think that the only reference you might read critics make for cast/crew is something like “well acted/directed film by this and this person” or “what on earth did he/she were thinking and agreed to play/direct this bad movie?” As mentioned in my initial message, I believe that critics only care if they watch something that works well within its category/genre/ambition or not and instead it wasted their time.

Under the same perspective and since you point out that indeed film companies tend to have a “type” of film they make/distribute, then for me if critics (and even audiences) rate Focus Features films higher than those made by Screen Gems, then this mean that the content made by Focus Features is a lot more sophisticated and intellectual than the pure entertainment products the other companies make. Therefore… critics rate them a lot higher and recommend them.

So my point here is that all the data presented are truly great but when they reflect the “liking” translated into “rating” of two completely different “mindsets” and attitudes towards movies (critics vs. audiences) then I can’t consider them – always for myself speaking of course – that they can prove anything at any point because they are two completely different bunches put under the microscope.

As you very rightly say now, this is a very complicated issue that won’t be solved by data alone. 🙂

Anyhow, keep up the good work. I have followed your work for many months now and you have helped me a lot in my film business “thinking”. Much appreciation for this!

Interesting research, but I don’t agree with all.. From some movies I’ve seen (Say Man of Steel, Alpha and Omega, etc, etc), I have seen far different things from critics and audience..

Examples: Man of Steel was very successful, but about half the critics were nasty over it but the audience loved it most.

Alpha and Omega was also successful; it made enough money for sequels and there are many fans too.

I just noticed you said most in that part, so I’m not sure now.

But the one after that, that’s not the case because I know Man of Steel was very huge on budget.

Some of the other charts don’t 100% prove your “yes” either because it’s not exactly a match, but I can see lot’s of the times, they are close. But it may not be like that for every company, especially small ones.

I also like to say that many critics are biased against some films, which can be one heck of a problem, but then again, this article doesn’t talk about this I think.

very interesting. your data shows that it is not complicated. audience and critics tend to like the same things, but because movies are critics’ area of expertise, they are a bit hyper-critical. BTW, this is very important, because those overall negative reviews by critics can hurt a film. it makes a difference if a film gets a 45 vs 55 (that is a 10 pt difference that moves a film from rotten to fresh on RT). And I actually do check out the ratings. It will almost never deter me from seeing a movie that I was waiting to see (like Chef), but can make all the difference in a movie I was on the bubble about (like Maleficent).

Very interesting findings. I am researching the difference between critic and user reviews, so I am interested whether you made a scientific study about these findings or do you have any scientific sources?

Kind regards

Interesting read. Just a quick bit of speculation. I don’t think the RT audience voting patterns suggest more women are voting. Your own research shows that women vote films higher than men and RT’s system gives a percentage of voters that have given a score of 3.5/5 or higher. They don’t, as far as I’m aware, do any kind of weighting to take into account voter habits, so that means movies that women vote on at all are more likely to receive a higher rating simply because the average vote is more likely to be above the threshold for considering it liked. Personally I don’t like that system, especially given what you are saying about critics’ and viewers’ voting habits (it seems the viewers should simply have a higher threshold for “like”), but in this circumstance perhaps it works well to fix the male bias from the balance of male/female votes.

There is of course a sizeable scholarly literature on the effect of film reviews on audience attendance and also the way that distributors seek to influence reviewers. A lot is known about audience segments that are affected by reviews and are not. I hope folks consult that literature as it is very valuable

Can I just add one thing … why do you people care what critics say … most of them are from one country and all people have different tastes why is there even a job for critic anymore given that most off the people who have access to the movie industry (cinemas, Netflix etc.) have access to a computer and internet. Can’t we all decide (the population) who consumes the product i.e. the movies decide if we like it or not who are this critics and why should I care what they like or dislike. This used to be a job but now it’s just a weird remnant of the internet-less past. Can’t we all agree that we disagree with almost all critic commentaries and just get on with our lives. From what I see critics (given that they are fewer than the rest of the consumers) could easily be corrupt and just give a more positive review two any product that is in they sphere of influence. (pardon my english i’m not a native speaker)