Are film critics losing sync with audiences?

A few weeks ago I was contacted by a journalist at the New York Times, who asked if I felt that film critics were losing touch with the tastes of the broader public. Naturally, I turned to the data to have a look at what's going on.

I built up a database of 10,449 movies released in US cinemas between 2000 and 2019 (more details in the Notes section at the end). I then gathered data on their Metascores (to measure the average critical score, out of 100) and IMDb user scores (to measure audience views, out of 10).

I think it will be useful to break down what I found into three smaller questions and address each in turn. They are:

Do critics and audiences have the same taste in movies?

How are the two sets of scores correlated?

If there's a shift over time, what could be causing it?

Do critics and audiences have the same taste in movies?

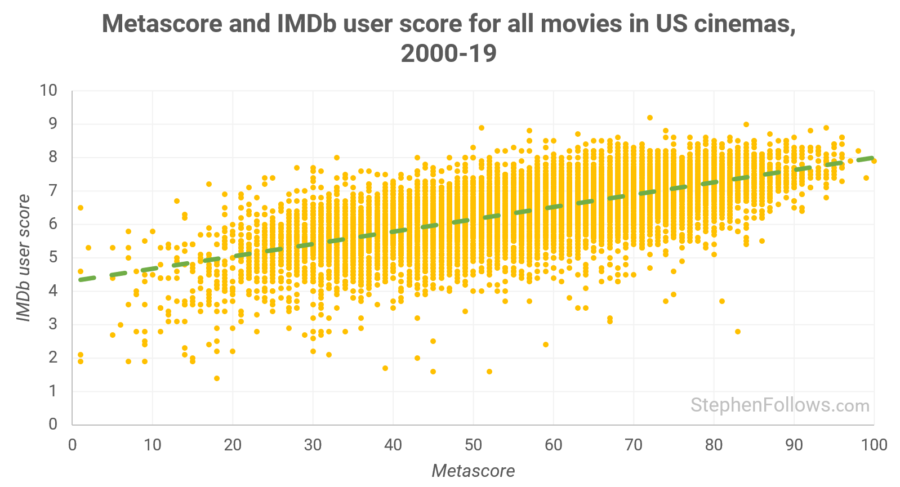

We need to start by looking at whether there is any link between the tastes of the two groups. Each yellow dot on the chart below is a movie in the dataset, displaying its Metascore and IMDb user score. I've also added a green trendline to help show the overall pattern.

We can see that there is a very rough trend in which audiences and critics do broadly agree. That said, many films show deviation from the trendline, and it gets particularly noisy at the "bad" end (to the bottom left).

As a side note, it's interesting to see the agreement in the "good" quadrant (top right). It seems that the two groups agree most when they are talking about extremely good movies.

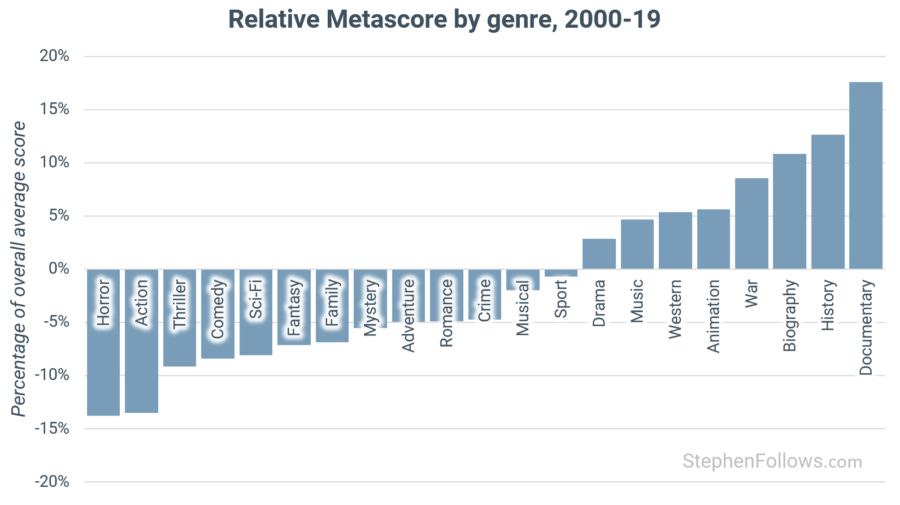

Next, let's look at how each of the groups typically rates different genres. The chart below shows how critics rate each genre, expressed as a percentage above or below the overall average score. For example, the average Metascore over this period was 58, so a genre with an average of 63.8 would be shown as being 10% above average.

Documentaries, historical and biographical films are rated highest, with horror, action and thrillers the lowest.
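As a rough sketch of the "percentage of the overall average" calculation described above, the snippet below uses the two figures quoted in the text (an overall average Metascore of 58 and a genre average of 63.8). The function name is purely illustrative and is not taken from the original analysis.

```python
def relative_to_average(genre_average, overall_average):
    """Return how far a genre's average sits above (+) or below (-)
    the overall average, expressed as a percentage."""
    return (genre_average / overall_average - 1) * 100


# Figures quoted in the article: overall Metascore average 58, genre average 63.8
print(round(relative_to_average(63.8, 58), 1))  # 10.0
```

The same function would work for IMDb user scores, as long as the genre average and the overall average are both on the same scale (out of 10).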

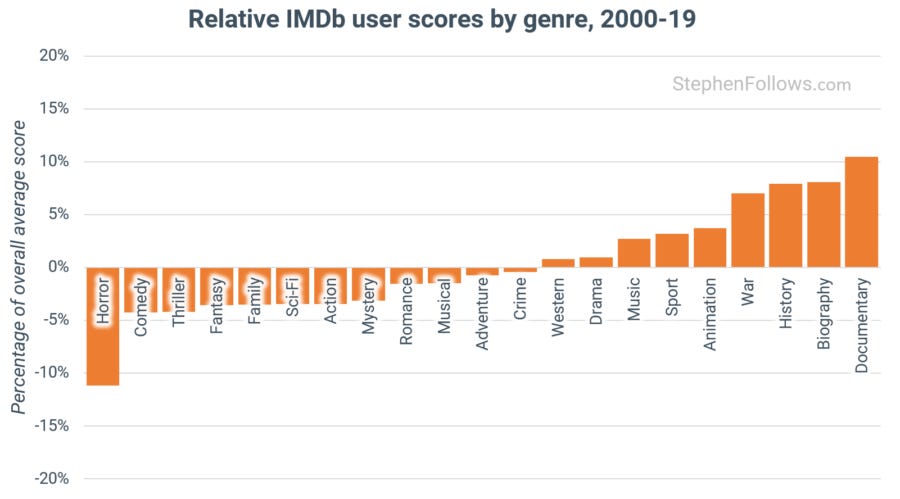

Using the same set of movies, we can use the same technique to look at how audiences on IMDb rate movies. We see very similar results; both groups had documentaries, historical and biographical films at the top and horror and thrillers at the bottom.

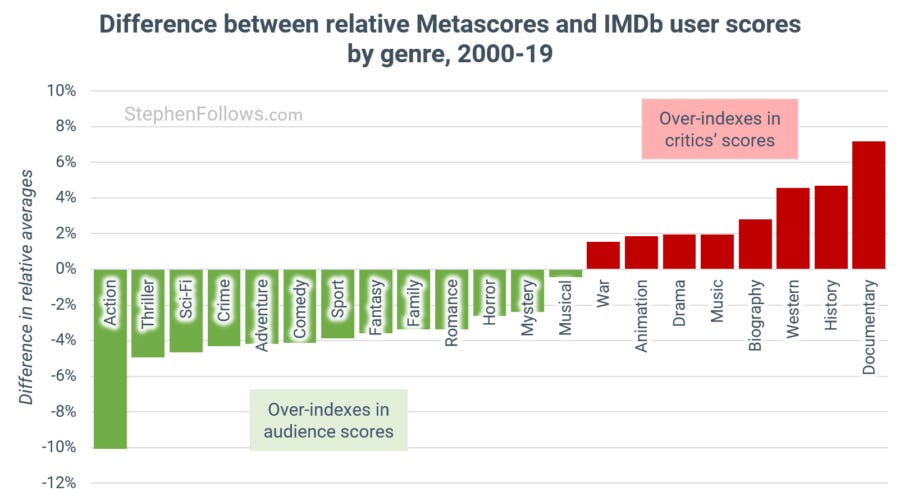

Overall, they are remarkably similar, albeit with a few differences. You may have spotted some of the areas of disagreement already but to make it even clearer, the chart below shows the same data but focuses on the difference between the two groups. You could see this as a measure of the difference between how much each group likes a genre. For example, while both groups rated documentaries the highest, critics did far more so than audiences.

This chart reveals that action, thriller and sci-fi movies could be called "crowd-pleasers" as they are preferred more by audiences than by critics. Conversely, westerns, historical films and documentaries are "critical darlings", with a skew towards pleasing critics.
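One plausible way to build this kind of comparison, assuming the gap is simply each genre's relative critic score minus its relative audience score, is sketched below. The function names and the numbers are made up for illustration and are not figures from the dataset.

```python
def taste_gap(critic_relative, audience_relative):
    """For each genre, subtract the audience's relative score from the critics'.
    Positive values mean critics favour the genre more than audiences do."""
    return {
        genre: round(critic_relative[genre] - audience_relative[genre], 1)
        for genre in critic_relative
    }


def label(gap):
    """Attach the article's terms to a gap value."""
    if gap > 0:
        return "critical darling"
    if gap < 0:
        return "crowd-pleaser"
    return "no skew"


# Hypothetical values (% above/below each group's own average), NOT real data
critic_relative = {"Documentary": 20.0, "Action": -8.0}
audience_relative = {"Documentary": 12.0, "Action": -3.0}

gaps = taste_gap(critic_relative, audience_relative)
for genre, gap in gaps.items():
    print(genre, gap, label(gap))
# Documentary 8.0 critical darling
# Action -5.0 crowd-pleaser
```

Comparing relative scores rather than raw ones matters here because Metascores are out of 100 and IMDb scores are out of 10, so the raw numbers cannot be subtracted directly.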

How are the two sets of scores correlated?

OK, so now that we have an understanding of the tastes of the two groups, let's turn to measuring the extent to which their scores are correlated.

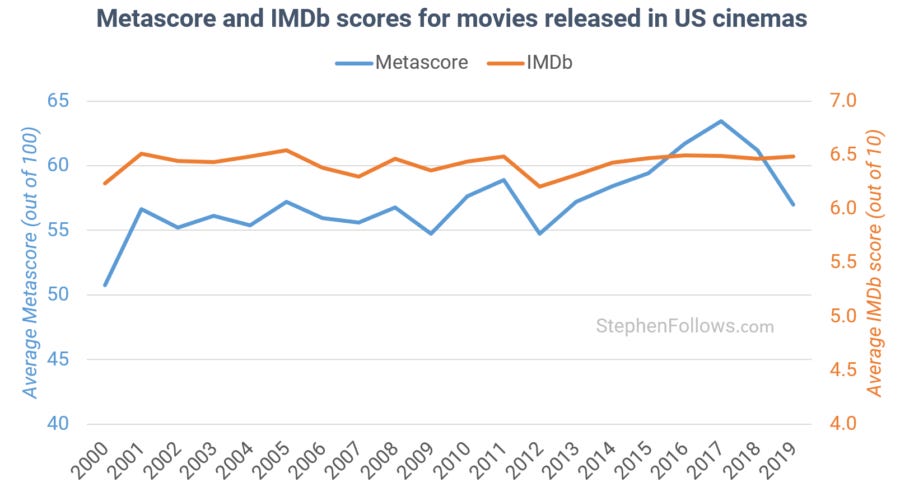

The chart below has two vertical axes, in order to allow us to plot both sets of scores. Which line is higher or lower than the other is irrelevant (as it's a consequence of the axis thresholds) but what is of interest is the extent to which they rise and fall together.

It reveals that while IMDb scores have been fairly static over the two decades, critical scores started to rise around 2012, falling again in 2017.

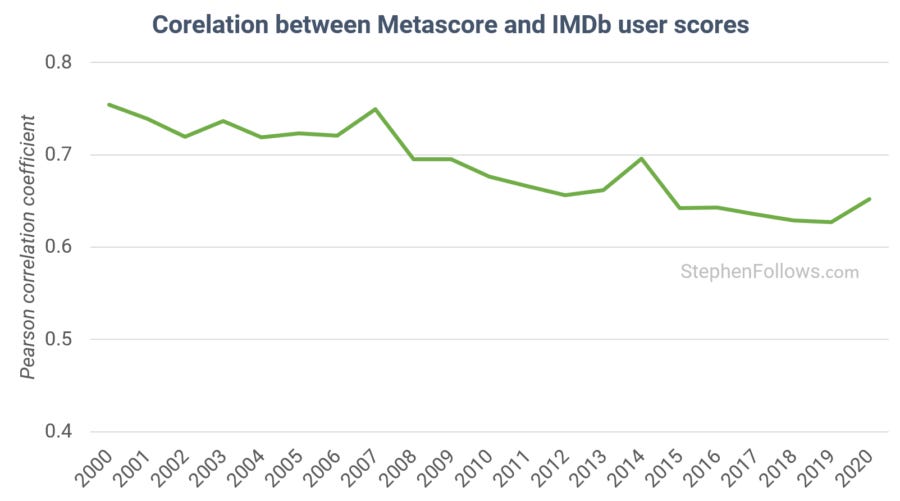

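We can be more precise than just judging by eye. By using the Pearson correlation coefficient we can measure the level of correlation, with zero meaning no correlation and one meaning perfect positive synchronisation (negative values, down to minus one, would mean the two sets of scores move in opposite directions).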

This reveals three things:

There is a strong correlation between the average scores of critics and film audiences.

The synchronisation was never complete, which matches the story told in the previous section on genre differences.

There has been a de-synchronisation taking place fairly consistently over the past two decades.
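For readers who want to try this themselves, here is a minimal sketch of the Pearson coefficient, assuming it is calculated across all films released in a given year (the exact grouping used for the chart is not spelled out here). The function names and sample rows are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return covariance / sqrt(spread_x * spread_y)


def pearson_by_year(films):
    """films: iterable of (year, metascore, imdb_score) tuples."""
    by_year = defaultdict(lambda: ([], []))
    for year, metascore, imdb_score in films:
        by_year[year][0].append(metascore)
        by_year[year][1].append(imdb_score)
    return {year: pearson(m, i) for year, (m, i) in sorted(by_year.items())}


# Made-up rows for illustration only, NOT taken from the dataset
films = [
    (2000, 80, 8.0), (2000, 60, 6.0), (2000, 40, 4.0),  # perfectly in step
    (2001, 80, 6.0), (2001, 60, 7.0), (2001, 40, 5.0),  # only loosely in step
]
print(pearson_by_year(films))  # {2000: 1.0, 2001: 0.5}
```

Because Pearson measures how the two sets of scores move together rather than their absolute values, the different scales (out of 100 and out of 10) do not need to be converted first.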

What is behind the de-synchronisation?

I dug a little deeper into the data to see if I could identify what's caused this de-synchronisation. I found a couple of key factors that explain at least some of this shift.

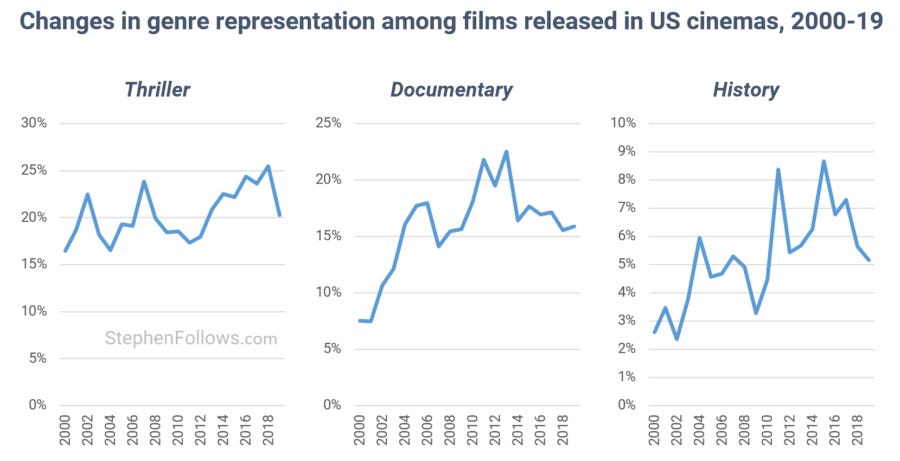

The first relates to what we've already seen, namely differing genre tastes. Three of the four most divisive genres have all seen a relative increase over the period (with the fourth, Action, neither increasing nor declining). This means that a larger percentage of the films in cinemas are within genres audiences and critics often disagree about.

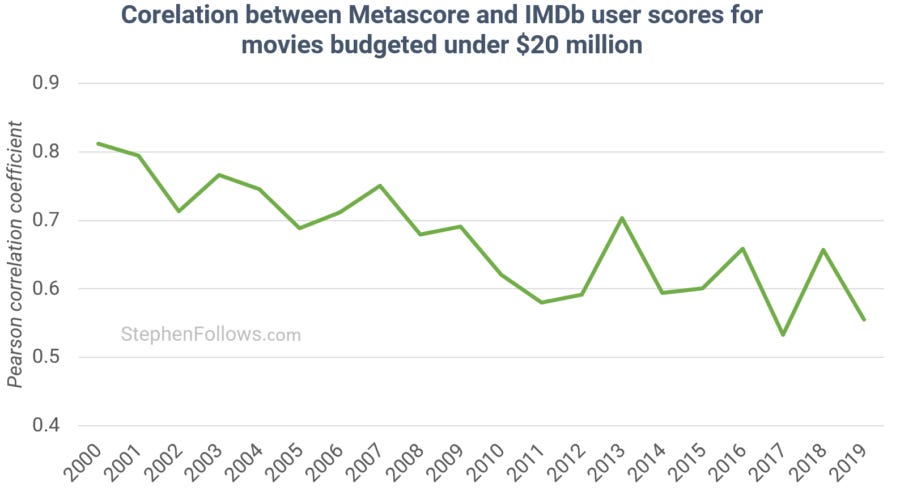

The second factor is budget. As making and releasing movies has become easier, we have seen an increase in the number of low budget films hitting the big screen. At the same time, these films have become increasingly divisive, although I'm not sure why.

In short, critics and audiences are disagreeing more about low budget films, all while a greater number of low budget films are opening in cinemas.

This gives us a (mostly) pleasing result. We know that the types of movies being released are changing, and that the growing types happen to be the ones the two groups disagree about most.

The only unresolved piece of the puzzle is why audiences and critics are moving further apart on the topic of low budget movies. Any suggestions would be welcomed in the comments below.

Notes

The data for today’s research came from Metacritic and IMDb. The criteria for inclusion in the dataset were all feature films released in North America between 1st January 2000 and 31st December 2019, which had a Metascore, at least 10 professional film reviews, at least 300 user votes from male IMDb users and at least 300 votes from female IMDb users. I looked at the gender of the critics and audience votes but found no link to de-synchronisation.
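The inclusion criteria above could be expressed as a simple filter along the following lines. This is only a sketch: the dictionary keys are hypothetical field names chosen for illustration, and neither Metacritic nor IMDb is being claimed to supply data in this shape.

```python
from datetime import date

FIRST_DAY = date(2000, 1, 1)
LAST_DAY = date(2019, 12, 31)


def meets_criteria(film):
    """Return True if a film record passes every inclusion rule from the Notes.
    All keys used here are assumed names, not real API fields."""
    return (
        film["is_feature"]
        and FIRST_DAY <= film["release_date"] <= LAST_DAY
        and film["metascore"] is not None
        and film["critic_reviews"] >= 10
        and film["male_votes"] >= 300
        and film["female_votes"] >= 300
    )


# A made-up record for illustration only
example = {
    "is_feature": True,
    "release_date": date(2010, 6, 18),
    "metascore": 71,
    "critic_reviews": 25,
    "male_votes": 1200,
    "female_votes": 450,
}
print(meets_criteria(example))  # True
```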