Script readers are powerful gatekeepers. They read and rate scripts on behalf of producers, studios and competitions, meaning that what they think of a script is critical.

Scoring well with readers could lead to your screenplay reaching the desks of the great and the good (who are hopefully also the rich and the powerful). Scoring poorly could mean that all the countless hours you put into your screenplay will just have been “character building”.

Script readers' work is conducted in private and their feedback is rarely shared, even with the screenwriters they are rating. This means there is very little empirical research into what readers think a good script looks like.

Given the critical role they play in filtering scripts, this lack of data is a severe handicap for any aspiring screenwriter.

To tackle this, I partnered with ScreenCraft to crunch data on over 12,000 unproduced feature film screenplays and the scores they received from professional script readers.

The final results reveal patterns in how script readers rate scripts, and what you should avoid if you're looking to maximise your script's scores.

You can read the full details of what we found via the free 67-page PDF report. This article summarises some of the key points, although it can only scratch the surface, so for the full picture, I recommend you download the report.

The article below pulls out eight of the most practical findings for screenwriters looking to maximise the scores they receive from professional script readers.

Tip 1: Know thy genre

The script readers in the research dataset were asked to provide scores for a variety of specific factors such as plot, tone and concept. I used these scores to track how important each factor was to the success of scripts. The higher the number, the stronger the correlation between that factor and the script’s overall Review Score.

The biggest correlations for success are within the subcategories of characterization, plot and style. Among the least important factors are formatting, originality and the script’s hook.
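To make the idea of ranking factors by correlation concrete, here is a minimal sketch. It assumes a Pearson correlation, which is my choice for illustration and not necessarily the measure used in the report. The scores are invented and the names `pearson` and `rank_factors` are hypothetical.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def rank_factors(factor_scores, overall):
    """Return (factor, correlation) pairs, strongest correlation first."""
    pairs = [(name, pearson(vals, overall)) for name, vals in factor_scores.items()]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)

# Invented scores for five imaginary scripts (not data from the report).
overall = [4.0, 5.0, 6.0, 7.0, 8.0]
factors = {
    "characterization": [3.0, 5.0, 6.0, 7.0, 9.0],
    "formatting": [6.0, 5.0, 7.0, 5.0, 6.0],
}

for name, r in rank_factors(factors, overall):
    print(f"{name}: {r:.2f}")
```

A factor whose scores rise and fall with the overall Review Score ends up near the top of the ranking, while one that moves independently of it ends up near zero.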

The chart above shows the data for all scripts in our dataset, but there were differences between genres. There are charts for eleven genres in the full report (pages 11-16) but to give you a sense of what I mean, here is the one for Family scripts, a genre which places the highest premium on catharsis.

Tip 2: If you’re happy and you know it, redraft your script.

I measured the average sentiment of each script, expressed as a value between minus one (i.e. entirely negative) and one (i.e. entirely positive). A value of zero would indicate that the script contained an equal number of positive and negative elements.
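For readers curious how a score on this scale can be produced, below is a minimal sketch. It assumes a simple word-counting approach with two tiny, made-up word lists; the actual tooling used for the research is not described in this article, so treat `POSITIVE`, `NEGATIVE` and `sentiment_value` as hypothetical.

```python
import re

# Tiny illustrative word lists; a real sentiment lexicon would be far larger.
POSITIVE = {"love", "happy", "smile", "win", "hope"}
NEGATIVE = {"kill", "fear", "cry", "lose", "dead"}

def sentiment_value(text):
    """Return a value from -1 (only negative words) to 1 (only positive words)."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    if pos + neg == 0:
        return 0.0  # no emotional words found, so treat the text as neutral
    return (pos - neg) / (pos + neg)

print(sentiment_value("They smile, full of hope, until fear takes over."))
```

With this formula, equal counts of positive and negative words cancel out to zero, which matches the scale described above.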

Drama and Thriller scripts have the strongest negative connection between their average sentiment value and Review Score. Dramas with a sentiment value of between 0.20 and 0.25 received an average score of 4.68 out of 10, whereas much more negative scripts (i.e. those with a sentiment value between -0.20 and -0.15) received an average score of 5.85.

My reading of these findings is that film is about conflict and drama. For almost all genres, the happier the scripts were, the worse they performed. The one notable exception was Comedy, where the reverse is true.

Tip 3: Some stories work better than others.

Using sentiment analysis, the vast majority of scripts can be grouped into one of six basic emotional plot arcs. It's hard to summarise such a complex topic in this short article so I suggest you refer to the full report (pages 19-27) but to give you a flavour of what I found, below is the chart for Fantasy scripts.

Fantasy scripts which use a 'Rags to Riches' arc (where average sentiment rises as the script progresses) perform much more poorly than those using a Cinderella arc (where the sentiment rises, falls and then rises again).
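One way to picture how an arc can be read off sentiment data is sketched below. It assumes the script has already been reduced to a list of sentiment values in story order, averages them over four equal stretches and records whether each stretch is higher or lower than the one before. This is a simplification of my own for illustration, not the method from the report, and `arc_shape` and `NAMED_ARCS` are hypothetical names.

```python
def arc_shape(sentiments, points=4):
    """Describe an arc, e.g. 'rise-fall-rise', from sentiment values in story order."""
    n = len(sentiments)
    if n < points:
        raise ValueError("need at least as many values as points")
    # Average the sentiment over equal-sized stretches of the script.
    means = []
    for i in range(points):
        chunk = sentiments[i * n // points:(i + 1) * n // points]
        means.append(sum(chunk) / len(chunk))
    # Record each change of direction between consecutive stretches.
    moves = []
    for before, after in zip(means, means[1:]):
        if after == before:
            continue
        move = "rise" if after > before else "fall"
        if not moves or moves[-1] != move:
            moves.append(move)
    return "-".join(moves) or "flat"

# Only the two arcs named in this article are mapped here.
NAMED_ARCS = {"rise": "Rags to Riches", "rise-fall-rise": "Cinderella"}

# Invented sentiment values for an imaginary script.
shape = arc_shape([-0.3, -0.2, 0.3, 0.4, -0.1, 0.0, 0.5, 0.6])
print(shape, NAMED_ARCS.get(shape, "unnamed in this sketch"))
```

Using only four stretches keeps the sketch short but also makes it crude; a finer-grained version would need smoothing so that small wobbles are not counted as changes of direction.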

Tip 4: Swearing is big and it is clever.

There is a positive correlation between the level of swearing in a script and how well it scored, for all but the sweariest screenplays.

Tip 5: It’s not about length, it’s what you do with it.

The exact length doesn’t matter too much, so long as your script is between 90 and 130 pages. Outside of those approximate boundaries scores drop precipitously.

Tip 6: Don’t rush your script for a competition.

The majority of the scripts in the dataset were submitted to script readers as part of a screenplay competition. When I looked at the delay between when a script was last saved and how it performed, I found a fascinating correlation - the closer to the deadline a script was finished, the worse it performed.

I interpret this to mean that if you're rushing a script for a deadline then you're not going to have spent enough time re-drafting. Conversely, it's not surprising that scripts which had ample time to be improved and tweaked perform better.
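The comparison behind this finding can be sketched as follows: work out how many days before the deadline each script was last saved, then see whether scores tend to rise as that gap grows. The deadline, dates and scores below are invented, and the least-squares slope is my assumption about how one might summarise the trend, not a description of the report's method.

```python
from datetime import date

def days_before_deadline(last_saved, deadline):
    """Whole days between the last save and the competition deadline."""
    return (deadline - last_saved).days

def slope(xs, ys):
    """Least-squares slope of ys on xs (here: score change per extra day)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

# Hypothetical deadline and invented entries: (date last saved, Review Score).
deadline = date(2019, 6, 30)
entries = [
    (date(2019, 6, 29), 4.5),
    (date(2019, 6, 20), 5.0),
    (date(2019, 5, 31), 5.5),
    (date(2019, 4, 1), 6.0),
]

gaps = [days_before_deadline(saved, deadline) for saved, _ in entries]
scores = [score for _, score in entries]
print(gaps)
print(round(slope(gaps, scores), 3))
```

A positive slope would mean that scripts finished further ahead of the deadline tended to score higher, which is the direction of the pattern described above.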

Tip 7: VO is A-OK.

Some in the industry believe that frequent use of voiceover is an indicator of a bad movie, but I found no such correlation. May I politely suggest that any complaints on the topic should be sent to editors, rather than writers.

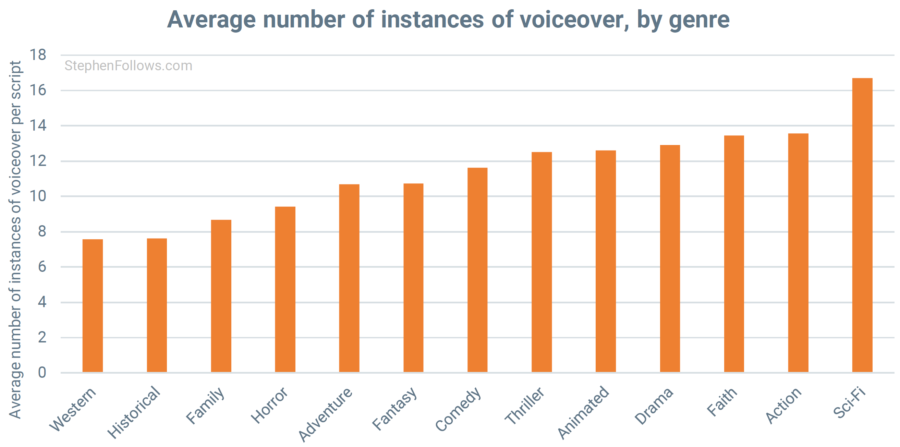

Voiceover is much more likely to be used in Action and Sci-Fi scripts than in Westerns or Historical scripts.

Tip 8: Don’t worry if you’re underrepresented within your genre – it’s your superpower.

Gender is a complicated topic and I have put far more detail on this topic and our methods in the report (pages 61-62). For this article, I will just share an interesting finding relating to how the scores differed by gender and genre.

Female writers outperform male writers in male-dominated genres (such as Action) and the reverse is true in female-dominated genres (such as Family).

My reading is that when it's harder to write a certain genre (either due to internal barriers like conventions or external barriers like prejudice) the writers who make it through are, by definition, the most tenacious and dedicated. This means that in a genre where there are few women (such as Action) the women who are there tend to be better than the average man in the same genre.

Bonus Tip: 'Final Draft' writers outperform writers using other software

There is a correlation between the quality of a script and the screenwriting software used to write it. Scripts written in Final Draft performed the best (an average score of 5.3 out of 10) whereas scripts written in Celtx performed much worse (an average of 4.7).

It should be noted that I'm not suggesting that the programs are affecting the art. There are likely to be a number of factors contributing to this, not least the fact that Celtx is free to use, meaning that more early-stage writers use it than its paid competitors.

Notes

This project is not about measuring art or rating how good a story is; it’s about decoding the industry’s gatekeepers. Rather than suggesting “this is what a good script contains,” we are saying “this is what readers think a good script contains”.

In the real world, this distinction may not matter as readers are an integral part of the industry’s vetting process. But it is important to remember that all the advice to screenwriters in this article and the full report is in relation to the data and through the lens of what script readers have revealed in their scores.

The most talented writers can overcome most, if not all, of these correlations. They can make the impossible possible, spin an old tale a new way, induce real tears over imagined events and lead us to root for characters we know to be doomed.

Privacy was something we took seriously throughout this project. ScreenCraft provided the score data in an anonymised form and they withheld data which would have offered deeper insights at the cost of reasonable privacy. We fully support their efforts to balance educational research aimed at helping screenwriters with protecting the privacy of the writers involved.

Epilogue

I am very grateful to ScreenCraft for partnering with me on this research. It simply would not have been possible without their data, their trust and all the help and advice along the way.

I was ably assisted in the research by Josh Cockcroft and Liora Michlin. Their input was vital to make sense of such a large dataset of scripts and scores, as well as being able to present it in a digestible manner for screenwriters.

This research was funded by proceeds from my last major project, The Horror Report. The Horror Report was published via a ‘Pay What You Can’ model, with all the income going to support future film data research.

I am very grateful to everyone who purchased a copy and especially to the generous people who chose to give more than the minimum. The script readers research simply would not have been possible without such contributions. Thank you.

Next week I'm going to share another aspect of this research - details of what the average screenplay looks like.