The data behind terrible, terrible movies

Last week, I used the release of Robin Hood as a catalyst for an article about box office flops. Normally, I don't like to single films out for undue criticism, but sometimes it can't be avoided. I'll try to be more restrained in future articles.

This week, I'm turning to the completely different topic of terrible, terrible movies - such as the recent release of Robin Hood. The film has received an average score of 32 out of 100 from film critics (just 15% of reviewers gave it a positive review) and it has an IMDb score of 5.3 out of 10. Also, I saw it and I want my time back.

To try and make lemonade out of this lemon, I decided to take a look at the very worst movies in US cinemas over the last three decades - turkeys.

We're going on a turkey hunt

We need to start by fine-tuning our turkey detectors:

Metacritic's Metascore. This is a weighted average of the reviews from leading film critics collected by Metacritic, expressed as a value out of 100.

IMDb's user scores. All registered IMDb users can rate movies out of 10, and IMDb uses a weighted algorithm to generate a single score for each movie, to one decimal place. For today's project, I only included IMDb scores when a movie had at least 500 votes, to limit the impact of vote-stuffing.

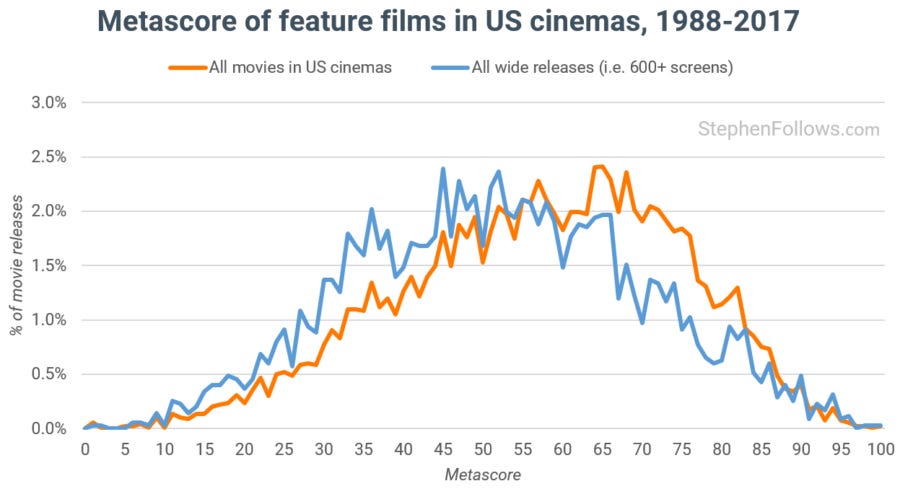

The average Metascore for all movies in US cinemas over the past thirty years is 54.2 out of 100. As well as looking at all movies on release, we need to look at those which received a wide release (i.e. opening in at least 600 US cinemas). This is because I think a movie's visibility should play into our calculation of which are the biggest turkeys. A wide release and a big marketing campaign give a terrible movie far more impact on how the industry is perceived than a terrible indie release which briefly limps out to cinemas.

Put another way, if a bad movie flops in the sticks and there's no-one there to watch it, does it really matter?
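Splitting out wide releases amounts to filtering on opening cinema count before averaging. Below is a minimal sketch, assuming a hypothetical record layout (`metascore`, `opening_cinemas`) and a few made-up films rather than the article's dataset; only the 600-cinema threshold comes from the text.

```python
from statistics import mean

WIDE_RELEASE_MIN_CINEMAS = 600  # 'wide release' threshold used in the article

# Made-up records for illustration only (not the article's data).
# The field names are hypothetical.
movies = [
    {"title": "Indie drama", "metascore": 72, "opening_cinemas": 4},
    {"title": "Studio sequel", "metascore": 41, "opening_cinemas": 3100},
    {"title": "Art-house horror", "metascore": 65, "opening_cinemas": 25},
    {"title": "Studio comedy", "metascore": 30, "opening_cinemas": 2800},
    {"title": "Unreviewed thriller", "metascore": None, "opening_cinemas": 12},
]


def is_wide_release(movie):
    """True if the movie opened in at least WIDE_RELEASE_MIN_CINEMAS US cinemas."""
    return movie["opening_cinemas"] >= WIDE_RELEASE_MIN_CINEMAS


def average_metascore(records):
    """Mean Metascore, skipping movies that have no Metascore at all."""
    return mean(m["metascore"] for m in records if m["metascore"] is not None)


all_avg = average_metascore(movies)
wide_avg = average_metascore([m for m in movies if is_wide_release(m)])
print(f"All releases:  {all_avg:.1f}")
print(f"Wide releases: {wide_avg:.1f}")
```

Movies without a Metascore are skipped rather than counted as zero, since a missing review score says nothing about quality.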

When we focus just on wide releases, the Metascore average drastically drops to just 38.1. This could be for a number of reasons, although the three which seem most likely to me are:

Hollywood doesn't care. For many types of studio movies, what critics think of a movie is not the most important factor for success. Having an established fan base and spending lots on marketing can often trump receiving good reviews.

Selection bias. To get a Metascore, a movie needs to be picked up for a theatrical release by a distributor and reviewed by at least four of Metacritic's chosen reviewers. The vast majority of wide releases already have a distributor, as they're released by the studio which made them, whereas indie movies have to attract a third-party distributor. This means the indie films which do reach cinemas have already passed a quality filter that studio films largely skip.

Critical snobbery. It's certainly possible that film reviewers, with their penchant for more "sophisticated" fare, judge big-budget Hollywood movies more harshly.

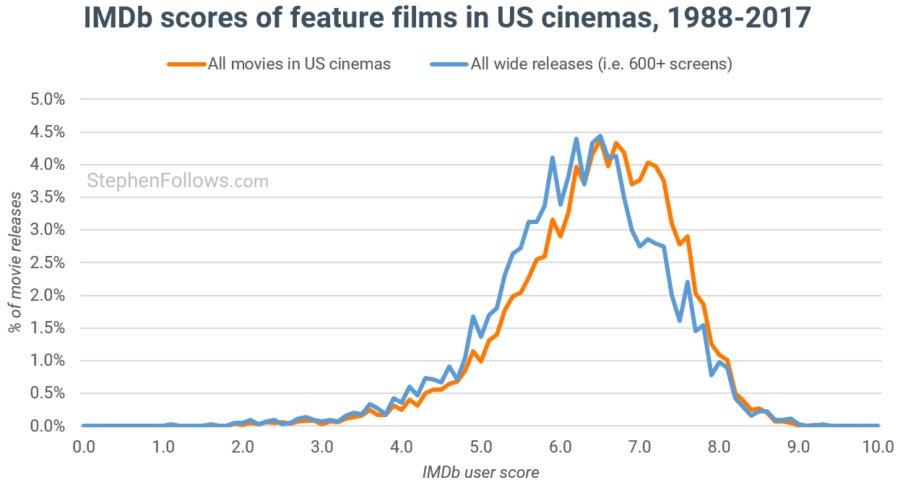

IMDb scores show a similar pattern, although the spread is much narrower: 85% of movies received an IMDb score between 5.0 and 8.0, whereas only 46% received a Metascore between 50 and 80. We see the same drop in average score for wide releases: all movies averaged 6.4 out of 10, while the wide-release average was just 4.8.

We're gonna catch a big one

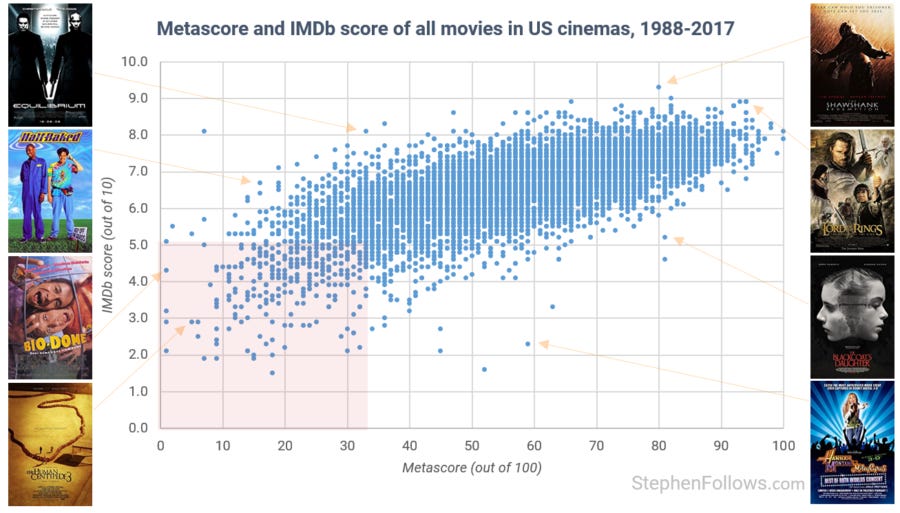

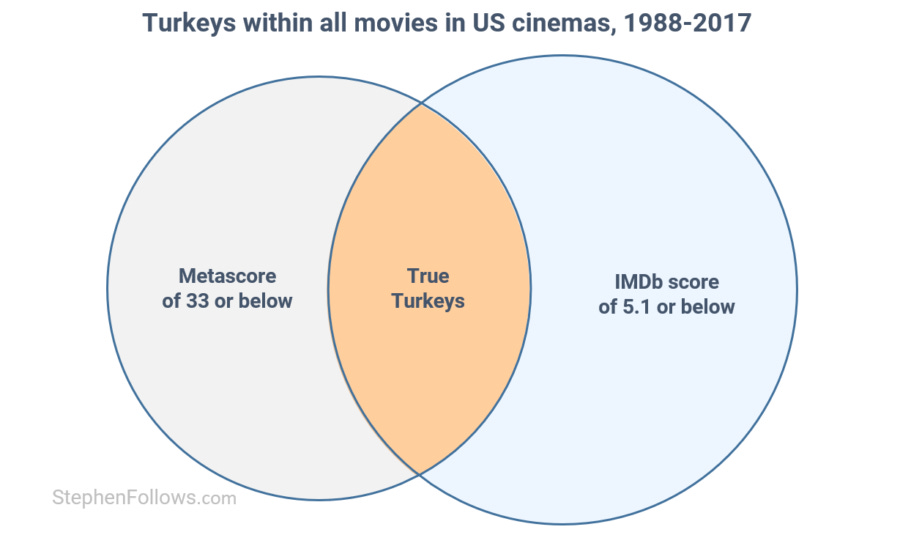

Using the data gathered above, I have decided to define a 'turkey' as a movie which received a Metascore of 33 or lower and an IMDb score of 5.1 or lower. Each threshold was set at the bar which 90% of all movie releases clear, so a turkey is a film that lands in the worst 10% on both measures.
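The turkey rule can be sketched as two percentile cut-offs joined by an AND. The article doesn't say which percentile method it used, so the nearest-rank 10th percentile here is an assumption, and the 20 scores are invented, which is why the cut-offs they produce differ from the article's 33 and 5.1.

```python
import math

# Made-up scores for illustration; the article's cut-offs (33 and 5.1)
# came from its full dataset, not from these numbers.
metascores = [12, 25, 30, 38, 44, 51, 55, 58, 61, 63,
              66, 68, 70, 72, 75, 77, 80, 83, 88, 94]
imdb_scores = [3.1, 4.2, 4.9, 5.3, 5.6, 5.9, 6.0, 6.2, 6.3, 6.4,
               6.5, 6.6, 6.8, 6.9, 7.0, 7.1, 7.3, 7.5, 7.8, 8.2]


def bottom_decile_cutoff(values):
    """Nearest-rank 10th percentile: the lowest score such that
    at least 10% of values are at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(0.10 * len(ordered)))  # 1-based rank
    return ordered[rank - 1]


def is_turkey(metascore, imdb_score, meta_cut, imdb_cut):
    """A turkey sits at or below the cut-off on BOTH measures."""
    return metascore <= meta_cut and imdb_score <= imdb_cut


meta_cut = bottom_decile_cutoff(metascores)   # 25 for this toy list
imdb_cut = bottom_decile_cutoff(imdb_scores)  # 4.2 for this toy list

# Applying the article's published cut-offs directly:
print(is_turkey(32, 5.0, meta_cut=33, imdb_cut=5.1))  # True
print(is_turkey(32, 6.2, meta_cut=33, imdb_cut=5.1))  # False
```

Requiring both conditions is what keeps the turkey share small: a film has to be panned by critics and audiences alike.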

The chart below shows the Metascores and IMDb scores of all films released in US cinemas in the past thirty years. The red shaded area in the bottom left is where our turkeys live.

By this measure, 2.6% of all released movies are turkeys.

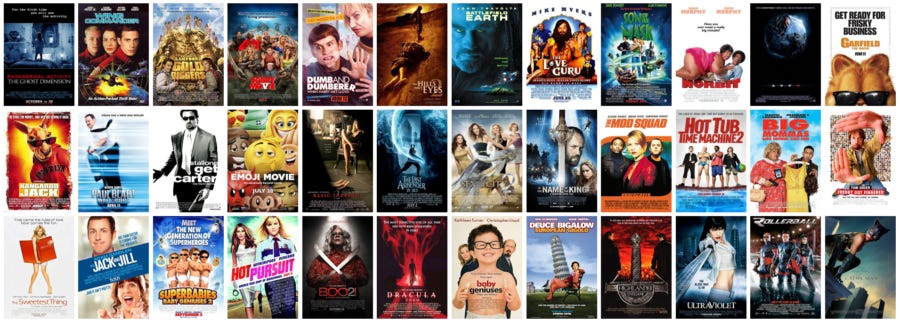

Without wanting to turn this into a BuzzFeed quiz, how many of the following 36 turkeys have you seen? (Or worse, paid to see?) My number is higher than I'm willing to admit...

I'm not scared

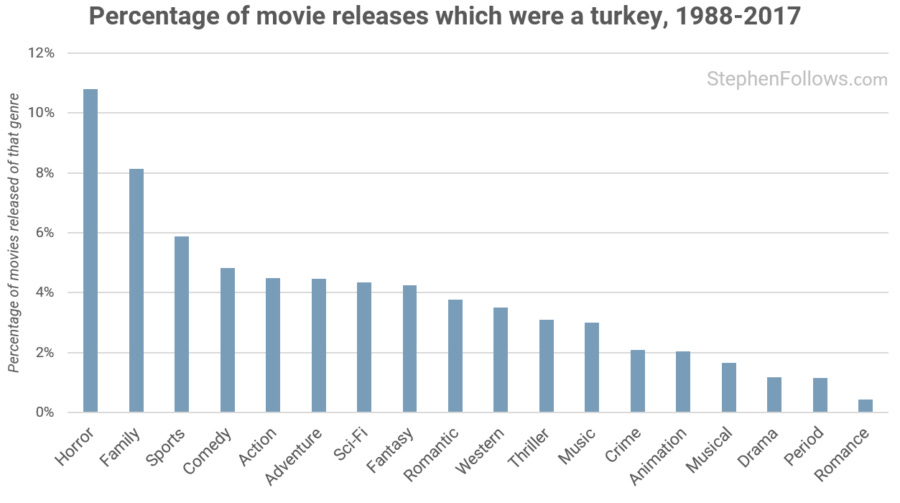

Now that we have our turkeys in sight, we can size them up. Over 10% of Horror releases fall into my turkey crosshairs, followed by Family movies. It could be argued that these two genres have audiences which are among the least sensitive to quality. In the past, I've shown that the correlation between reviews and profitability for Horror movies is extremely low, and I imagine that audiences for Family movies have other concerns than "Is it going to be the best film I can see?"
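A per-genre turkey rate is a grouped count of turkeys divided by a grouped count of releases. A minimal sketch, assuming each release has already been reduced to a hypothetical `(genre, is_turkey)` pair; the rows below are made up.

```python
from collections import Counter

# Made-up (genre, is_turkey) pairs for illustration only.
releases = [
    ("Horror", True), ("Horror", False), ("Horror", False), ("Horror", False),
    ("Family", True), ("Family", False), ("Family", False),
    ("Family", False), ("Family", False),
    ("Drama", False), ("Drama", False), ("Drama", False),
]


def turkey_rate_by_group(rows):
    """Share of releases in each group that are turkeys."""
    totals = Counter()
    turkeys = Counter()
    for group, turkey in rows:
        totals[group] += 1
        turkeys[group] += turkey  # a bool adds as 0 or 1
    return {group: turkeys[group] / totals[group] for group in totals}


rates = turkey_rate_by_group(releases)
for group, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{group}: {rate:.0%}")
```

Because the grouping key is just the first element of each pair, the same function would work for any other label, such as release month.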

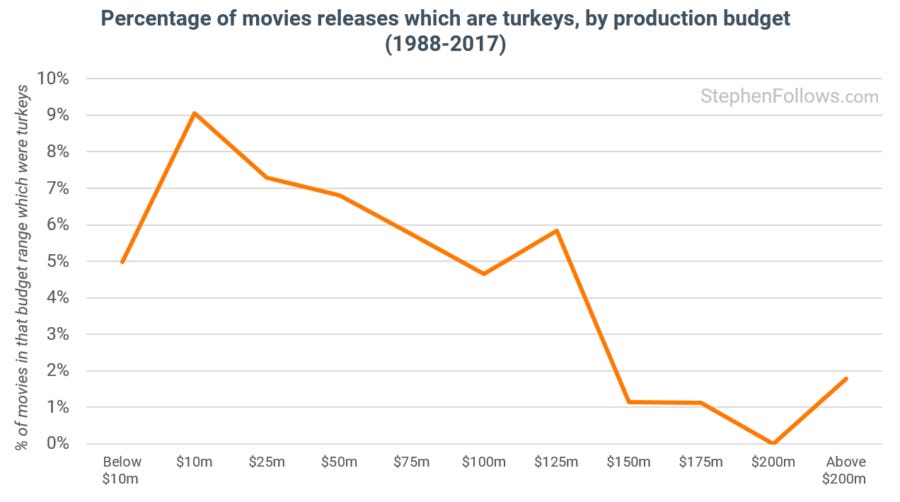

Earlier on, I touched on the fact that some movies, such as those on wide release, are far more visible than others, and that this colours our preconceptions about movies. There is no better example of this than when we break our turkeys down by budget.

I suspect most readers expect me to say that as budgets rise, so does the turkey frequency. Not so. In fact, it's the reverse.

Movies on the lower end of the budget spectrum are much more likely to be turkeys than those on bigger budgets. How does this square with our earlier finding that reviews are usually lower for wide releases? It's about extremes. Hollywood may not be very adept at creating great films, but it is quite good at avoiding terrible ones. Marketing can turn a somewhat-bad film into a hit (*cough* Jurassic World *cough*), but it can't do much with a downright stinker.
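Before comparing turkey rates by budget, each budget has to be assigned to a band. The article doesn't publish its band edges, so the edges, labels and four example films below are all hypothetical. `bisect_right` places a budget that falls exactly on an edge into the higher band.

```python
from bisect import bisect_right
from collections import defaultdict

# Hypothetical band edges in US dollars; the article does not publish its bands.
BAND_EDGES = [5_000_000, 20_000_000, 50_000_000, 100_000_000]
BAND_LABELS = ["<$5m", "$5m-$20m", "$20m-$50m", "$50m-$100m", "$100m+"]


def budget_band(budget_usd):
    """Map a budget to its band label; a budget equal to an edge joins the higher band."""
    return BAND_LABELS[bisect_right(BAND_EDGES, budget_usd)]


# Made-up (budget, is_turkey) pairs for illustration only.
films = [
    (2_000_000, True),
    (3_500_000, False),
    (12_000_000, False),
    (150_000_000, False),
]

counts = defaultdict(lambda: [0, 0])  # band -> [turkeys, releases]
for budget, turkey in films:
    band = budget_band(budget)
    counts[band][0] += turkey
    counts[band][1] += 1

rates = {band: turkeys / total for band, (turkeys, total) in counts.items()}
print(rates)
```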

What a beautiful day (or month)

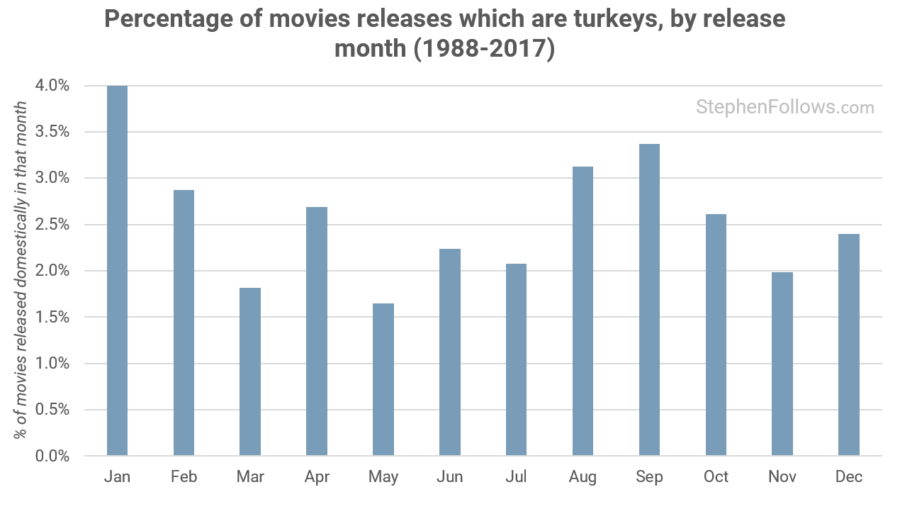

Next, let's look at when turkey season is. If you're allergic to turkeys, may I suggest visiting the cinema more often in May (when 1.7% of releases have historically been turkeys) and less often in January (4.0%).

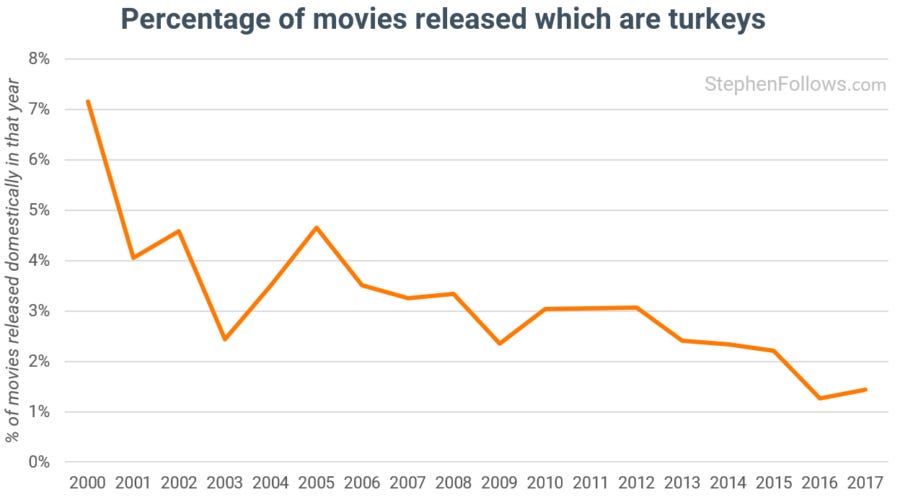

Let's end on a positive note. Hollywood appears to be getting much better at avoiding the very worst kind of movies. Turkey sightings have been dropping over the past few decades and are now much lower than at the turn of the century.

Notes

The data for today's research came from IMDb, Wikipedia, Box Office Mojo and The Numbers / Opus. Genre classifications are those of Box Office Mojo and all release dates are the US theatrical dates.

Epilogue

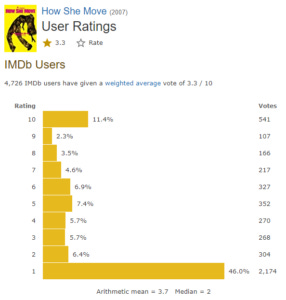

The IMDb user score is a pretty good measure when we're looking at this many films. However, there are instances where it fails. IMDb's secret algorithm is designed to minimise the impact of vote-stuffing by people looking to raise or lower the score of a particular film, yet along the way I have found some films where the votes seem suspicious. We can't know the full story from the outside, but we can observe patterns. A typical voting pattern is a bell curve, where the number of votes cast rises to a peak and then falls away again.

One film whose score appears to have been distorted by suspicious vote-stuffing is the music-based drama How She Move. The film's Metascore is 62, but its IMDb score languishes at 3.3. Almost half of all the votes cast for it gave the lowest possible score of one out of ten. This seems strange, as even truly terrible movies tend to have a more even spread of votes (e.g. Movie 43 has an overall IMDb score of 4.3, and under a fifth of its votes were one star).
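The one-star pattern described above can be expressed as a simple check on a film's vote histogram. This is only a sketch: the 0.4 threshold, the function names and both histograms are made up, and it says nothing about how IMDb's own weighting works. The threshold was picked merely to sit between the two examples in the text (roughly half one-star votes for How She Move versus under a fifth for Movie 43).

```python
def one_star_share(histogram):
    """histogram[i] holds the number of votes for score i + 1 (scores run 1 to 10)."""
    total = sum(histogram)
    return histogram[0] / total if total else 0.0


def looks_stuffed(histogram, threshold=0.4):
    """Flag a film whose one-star share is at or above a hypothetical threshold."""
    return one_star_share(histogram) >= threshold


# Made-up vote counts for scores 1..10, for illustration only.
bell_shaped = [50, 60, 90, 130, 170, 180, 150, 90, 50, 30]
one_star_spike = [480, 20, 20, 30, 40, 60, 80, 90, 100, 80]

print(one_star_share(bell_shaped), looks_stuffed(bell_shaped))
print(one_star_share(one_star_spike), looks_stuffed(one_star_spike))
```

A single share is a blunt instrument; it can only flag a film for a closer look, not prove that anyone tampered with its votes.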

I have actually conducted a lot of research into the mechanics of the IMDb score algorithm and built up a database of highly suspicious films. In some cases, all of the movies starring certain actors and actresses appear to have been manipulated to increase their scores. I don't believe this is IMDb's doing (from what I can see, they work actively to prevent exactly this) but rather that of someone connected to the movies or their stars. In the end, I decided not to publish what I found, for a few reasons. Publishing would have revealed a little too much about how to manipulate the IMDb system (which wouldn't be good for the industry), and it wouldn't have served any purpose other than to castigate or shame those involved (some of whom may have been unaware of the fraud they benefit from). I did email about 100 people who had suspicious patterns on the majority of their movies. I pointed out the patterns and offered them anonymity in return for a chat about their experiences with the system. Strangely enough, none of those 100 people replied!