What types of films are critic-proof?

I crunched the numbers on 8,190 movies to discover which types stay profitable even when the critics are unkind.

Five Nights at Freddy’s 2 has just been released, and, at the time of writing, it appears that two things are true:

The critics hate it. Currently, only 12% of the 81 reviews on Rotten Tomatoes are positive.

That hate won’t prevent it making money. It opened at number 1 at the domestic box office, collecting over $60 million in its opening weekend, on a budget of roughly half that.

This got me thinking: what types of films show the least correlation between profitability and critics’ opinions?

Regular readers will know that I recently published a report on movie profitability connections, and so I already have the data to research this. (You can read more on that report at GreenlightSignals.com.)

I examined the financial performance of 8,190 movies, focusing on those that received poor critical reception, which I defined as scoring 49 or below out of 100 on Metacritic.

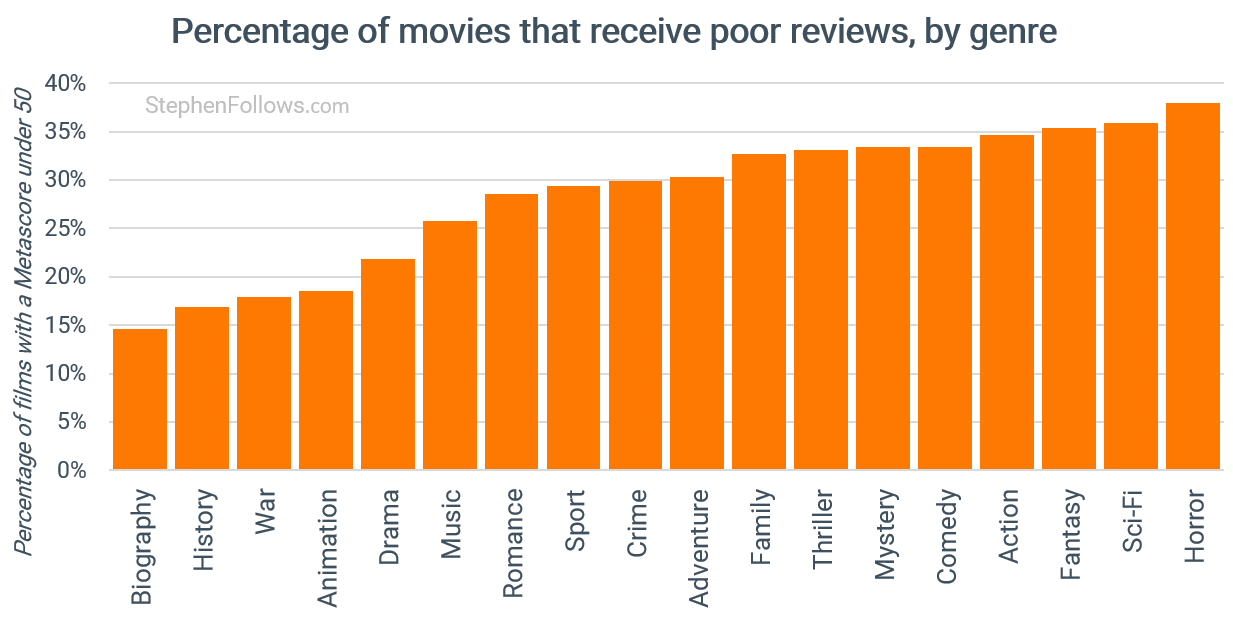

What kinds of movies do critics dislike?

The first thing to note is that critics do show repeated patterns in the kinds of films they like and dislike.

In today’s article, I’m not going to go into whether the films are actually good or bad artistically (i.e., whether the critics are correct and/or biased); I’m just going to state the fact that critics did or did not like them. That’s a debate for another day.

Across the dataset, critics rarely give poor reviews to biographies, historical films, and war films. This is obviously a highly interconnected set of genres, as a film can have multiple genres applied, and there is a lot of overlap among those classifications.

At the other end of the spectrum, we have genres they frequently review poorly, namely horror, sci-fi and fantasy films. In fact, we could group all the films at this end as ‘spectacle films’, whereas the critics’ favourites tend to be emotional and cerebral.

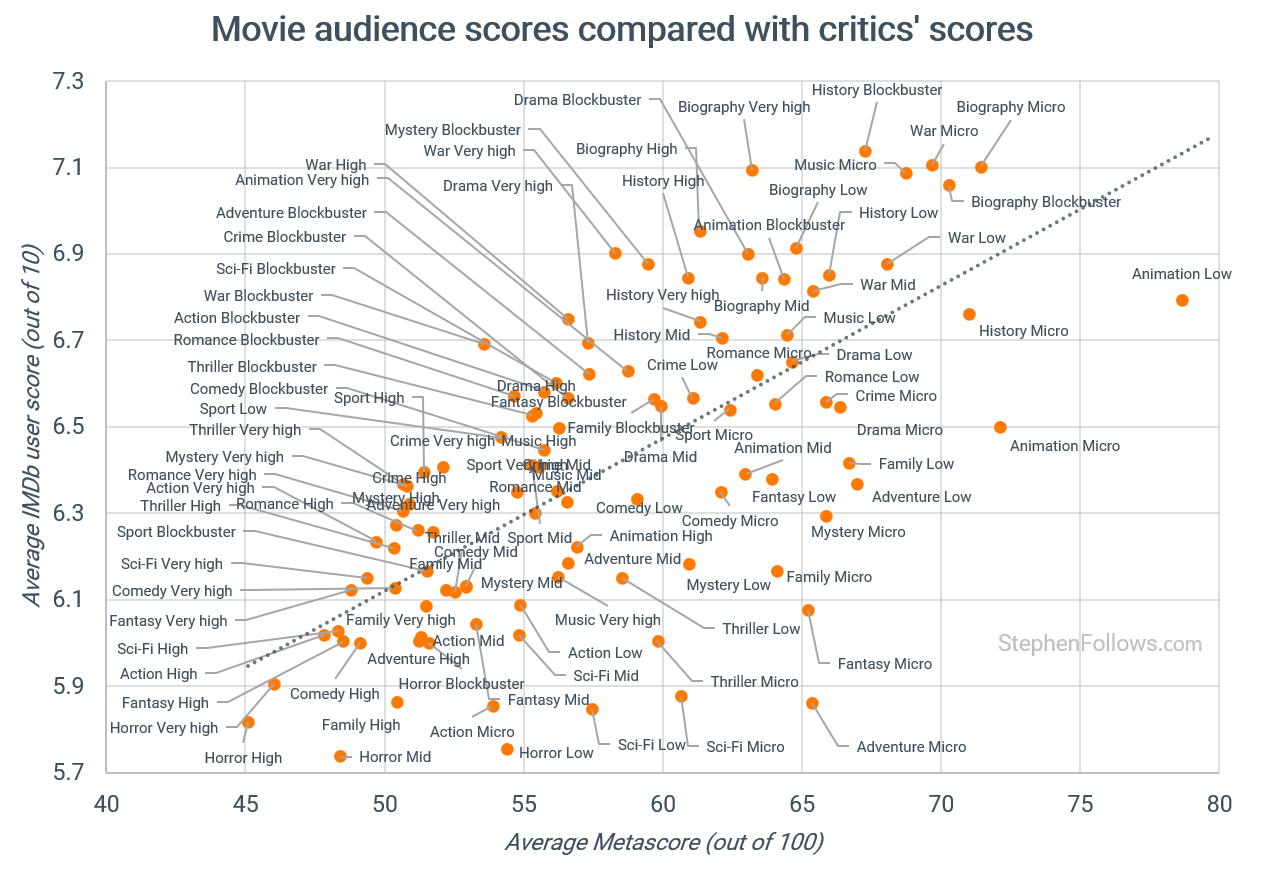

Does the audience agree?

When we bring in audience scores (represented here by the IMDb user score), we can see that while audiences and critics do broadly agree, there are many cases of misalignment.

Overall, the correlation between the two sets of scores is 0.66, where 1 would be a perfect correlation and 0 would be no correlation at all.
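For anyone who wants to run the same kind of check on their own list of films, here is a minimal sketch of a correlation calculation. It assumes the figure above is a Pearson correlation, which the article does not specify, and the function name and toy scores are invented for illustration only.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # How much the two sets of scores move together around their means.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return covariance / sqrt(spread_x * spread_y)


# Made-up scores for five films, NOT taken from the dataset.
critic_scores = [22, 41, 55, 68, 90]          # Metascore-style, out of 100
audience_scores = [5.1, 6.4, 6.0, 7.2, 8.1]   # IMDb-style, out of 10

print(round(pearson(critic_scores, audience_scores), 2))
```

The two scores sit on different scales (out of 100 and out of 10), but that does not matter here, because this kind of correlation only looks at how the scores move together.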

These scores tell us what critics and audiences thought of the films, but they do not tell us how much any of this matters commercially. So let’s pull in profitability data and see what we can discover.

What’s the connection between critics’ scores and profitability?

As any film fan is aware, a movie can be poorly received yet still make money, and a film with glowing reviews can still struggle.

Side note: While writing the paragraph above, I subconsciously switched between using the words “movie” and “film” mid-sentence. It made me think of the research I did into the connotations of the two word choices: “Which types of feature films are called ‘films’, and which are called ‘movies’?” Anyway, back to our main mission…

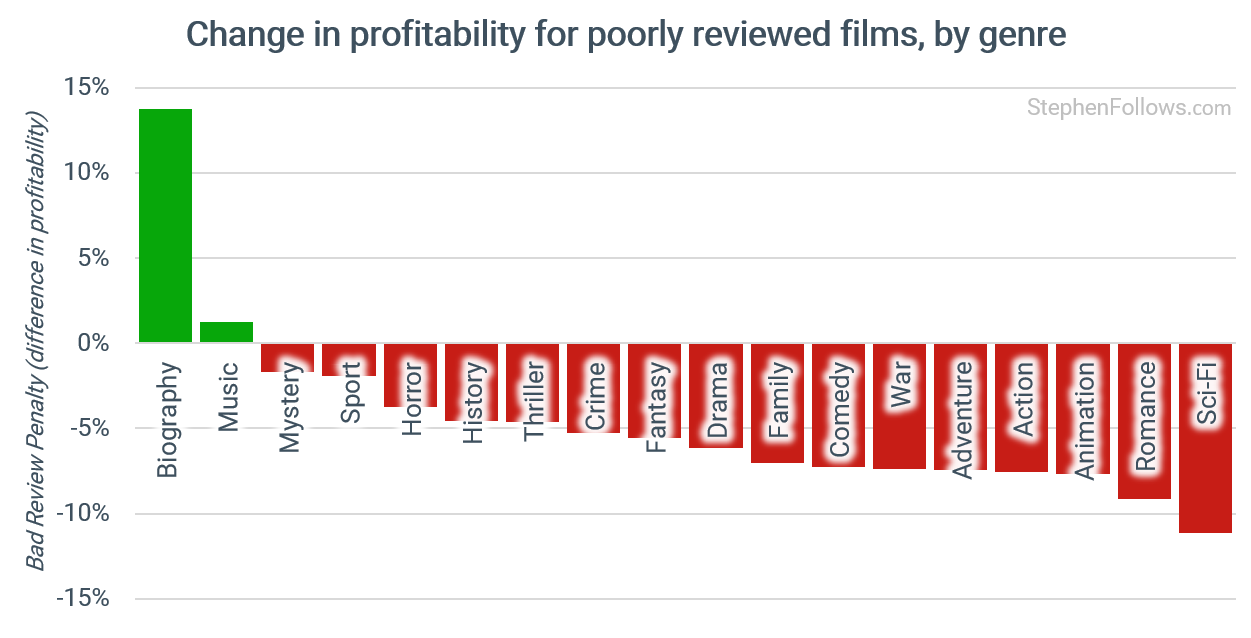

To understand which types of films are least affected by weak reviews, I created the “Bad Review Penalty”, which is a simple measure of the connection between critical reception and profitability.

It compares two proportions within each film group. The first is the share of all films in that group that were profitable. The second is the share of the group’s poorly reviewed films that were profitable, where poorly reviewed means a Metascore of 49 or below. The Bad Review Penalty is the second proportion minus the first.

For example, if 40% of all sci-fi films made a profit but only 28% of the poorly reviewed titles did, the Bad Review Penalty would be -12 points.

A small value means profitability is much the same whether the reviews are flattering or not.

A larger negative value means the group becomes less profitable when reviews are weak.

A positive value would suggest the category is largely insulated from critical opinion.
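The calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the data layout (a list of Metascore and profitable-or-not pairs) and the toy films are all invented here, and are not taken from the dataset or from the report’s own code.

```python
def bad_review_penalty(films, weak_cutoff=49):
    """Bad Review Penalty for one group of films, in percentage points.

    films: list of (metascore, is_profitable) pairs (a hypothetical layout).
    weak_cutoff: a Metascore at or below this counts as poorly reviewed.
    """
    if not films:
        raise ValueError("need at least one film")
    # Profit flags for the poorly reviewed films only.
    weak = [profitable for score, profitable in films if score <= weak_cutoff]
    if not weak:
        raise ValueError("no poorly reviewed films in this group")
    share_all = sum(profitable for _, profitable in films) / len(films)
    share_weak = sum(weak) / len(weak)
    # Negative means poorly reviewed films were profitable less often.
    return round((share_weak - share_all) * 100, 1)


# Ten made-up films: 4 of 10 are profitable overall (40%),
# but only 1 of the 5 poorly reviewed ones is (20%).
toy_group = [
    (30, True), (35, False), (40, False), (45, False), (49, False),
    (55, True), (60, True), (70, True), (80, False), (90, False),
]

print(bad_review_penalty(toy_group))  # -20.0
```

In this toy group the penalty is -20 points, meaning the poorly reviewed films were profitable far less often than the group as a whole.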

Using this measure, we find that some genres look reasonably stable when viewed in isolation.

Animation, sci-fi and romance are the most negatively affected by bad reviews.

Horror, sport and mystery films show only small shifts in the share of profitable films when reviews are weak. At first glance, this suggests a degree of insulation. In practice, much of this stability comes from the large number of smaller projects within these genres. (More on the effect of budget scale in a moment.)

Music films and biographies sit apart from the rest of the dataset. Both tend to attract audiences who are already interested in the subject, whether that is a musician, a public figure or a real event. Their commercial performance relies more on existing curiosity than on critical approval, which explains why these categories display a small positive Bad Review Penalty.

Genre gives us an initial sense of where reviews matter, but it does not explain the full pattern. There is another factor that matters far more than genre alone.