A few days ago, Disney revealed that they have developed AI technology which can read the faces of audiences to track how they are experiencing a movie, second by second. This shouldn’t come as much of a surprise; Hollywood has long relied on test audiences to shape their movies and most modern smartphones have cameras which can locate and track human faces.

Despite this, the fact that the technology is already in use has become a big talking point in the industry. Views range from joy at finally being able to get reliable audience data to fear of how much this may embolden already-meddlesome studios to override the wishes of artists and auteurs.

Regardless of where you stand on the issue, it’s certainly an interesting development. Disney has a slightly bigger budget than I do on this blog, but I thought it would be fun to play around with a few ideas in the same field. First up, let’s see what emotions are displayed on the faces of actors on movie posters.

I collected movie posters for all movies which grossed at least one dollar in US cinemas between 2000 and 2016 and ran them through Microsoft’s Azure Emotion engine. This tool can detect faces and measure levels of different key emotions (anger, contempt, disgust, fear, happiness, sadness and surprise). There’s more detail on my methods at the bottom of the article, including a link to where you can try the tool for yourself.

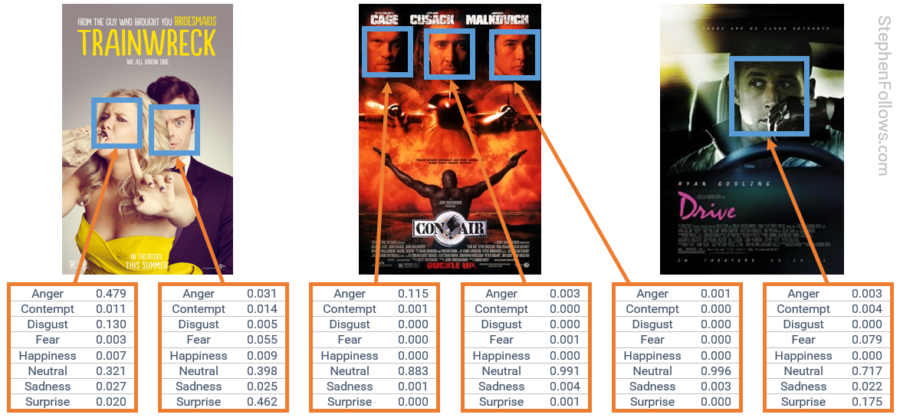

How does the system work?

Microsoft’s Azure Emotion engine scans an image and identifies all of the faces. It then compares these faces to patterns it has learned of how humans display certain emotions. Finally, it outputs a value for each emotion. Below are a few examples:

Let’s take a look at each of the seven emotions in turn.

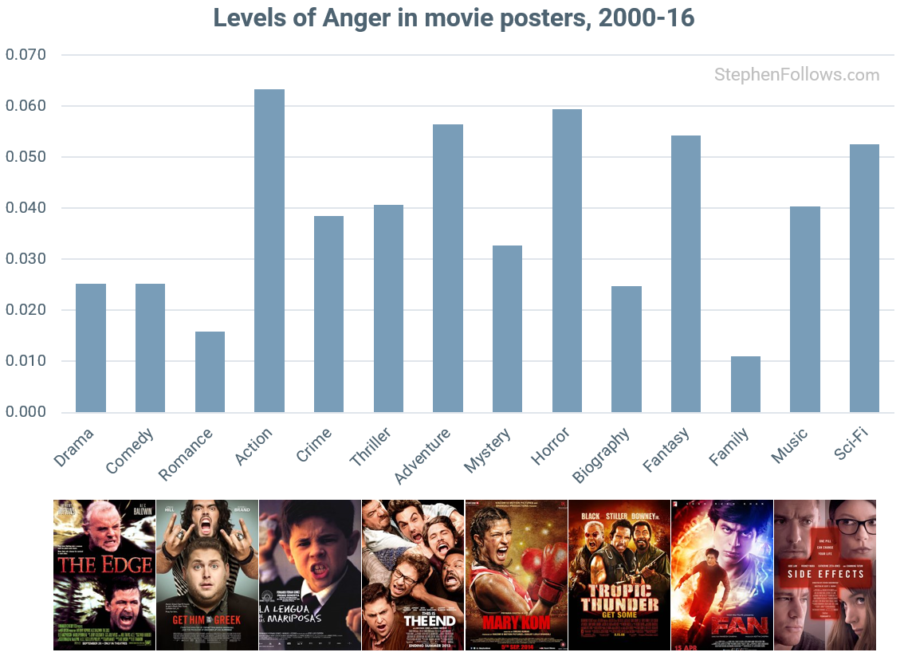

Anger in movie posters

Action movies have the angriest looking actors on their posters, followed closely by Horror, Adventure and Fantasy movies.

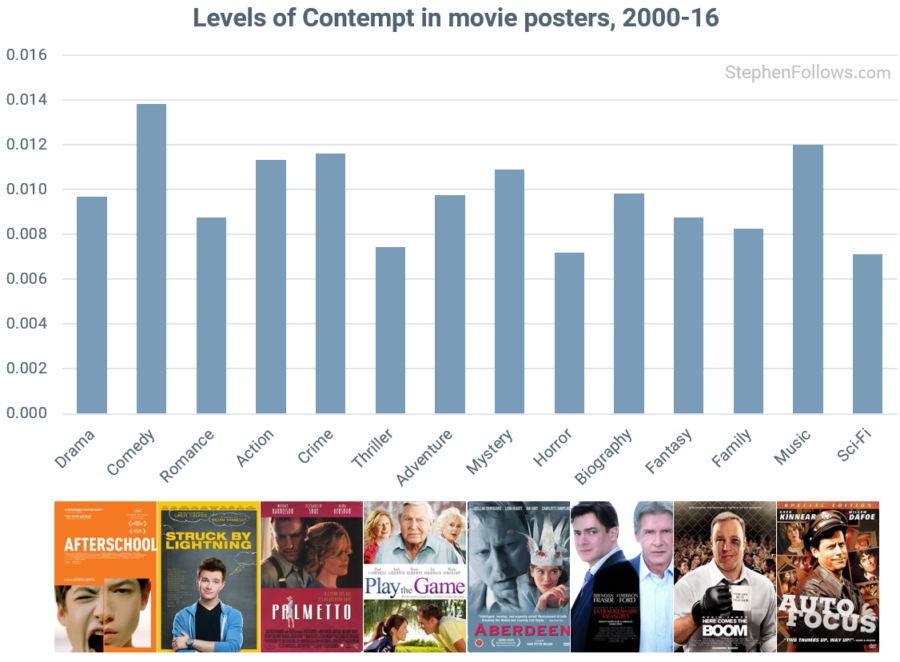

Contempt in movie posters

Contempt is most frequently used on comedy posters and, perhaps surprisingly, least on Thriller, Sci-Fi and Horror movies.

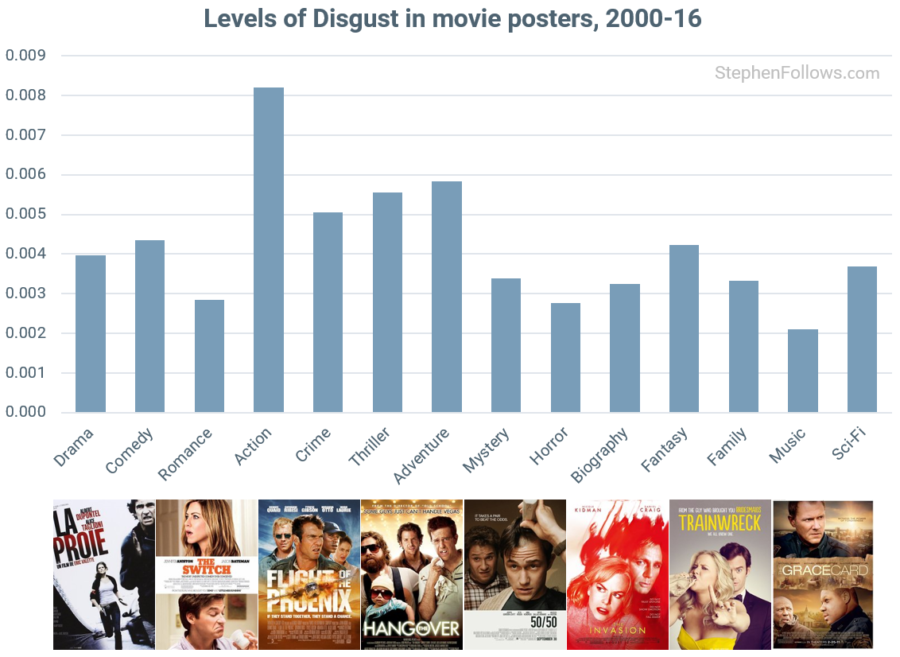

Disgust in movie posters

Levels of disgust are similar to those of anger, with Action movies taking the disgusting lead.

An interesting pattern is emerging between Action movies and Music-based films. They both have relatively high levels of both anger and contempt, but while Action films have high levels of disgust, Music films do not. Maybe the bad guys musicians have to deal with wash more often than those faced by ass-kickers?

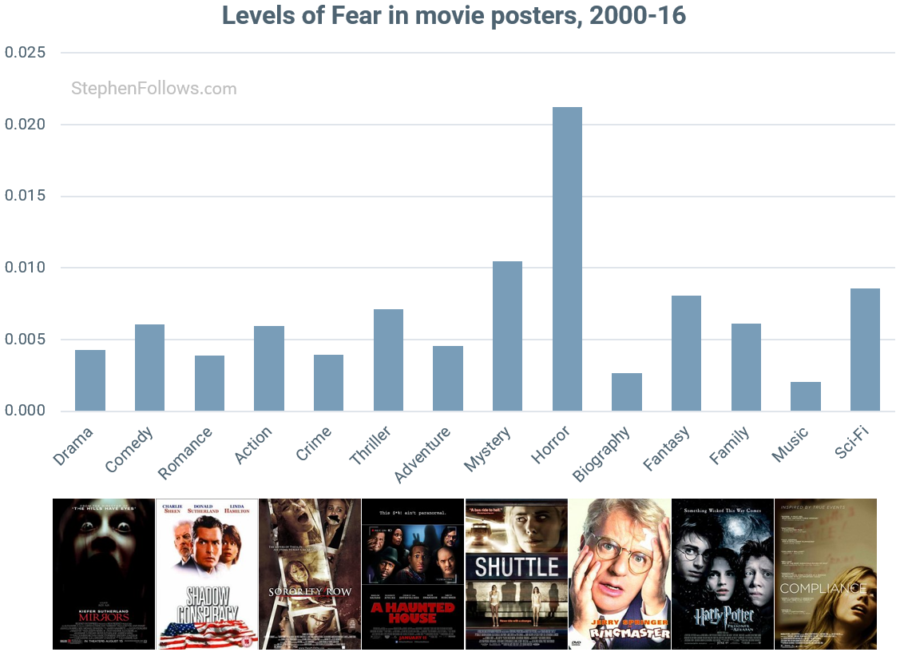

Fear in movie posters

Horror movies dominate the fear category to a scary degree, followed by Mystery, Sci-Fi and Fantasy movies.

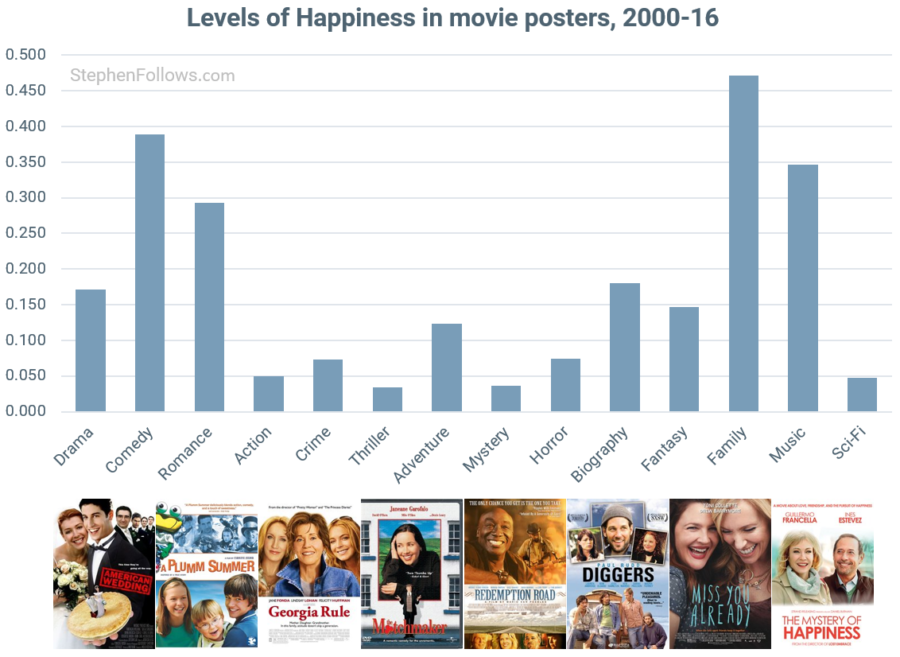

Happiness in movie posters

The happiest actors feature on Family and Comedy movies, followed by Music-based films. Maybe it’s my ‘Well-Groomed Musical Bad Guys’ theory at play again.

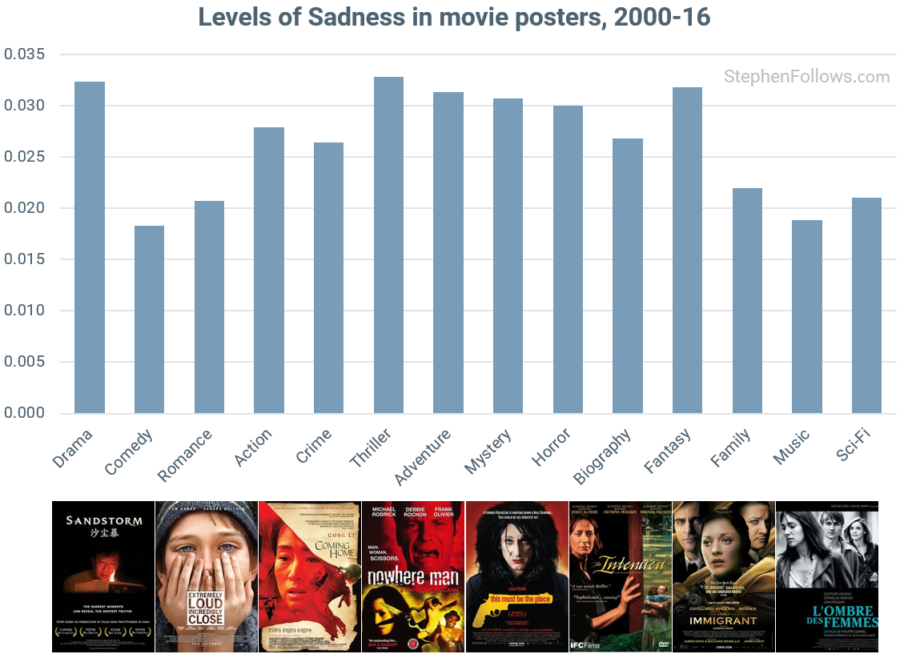

Sadness in movie posters

Thrillers take the award for “Movie Posters Featuring the Saddest Actors”, beating Dramas and Fantasy films by the smallest of margins.

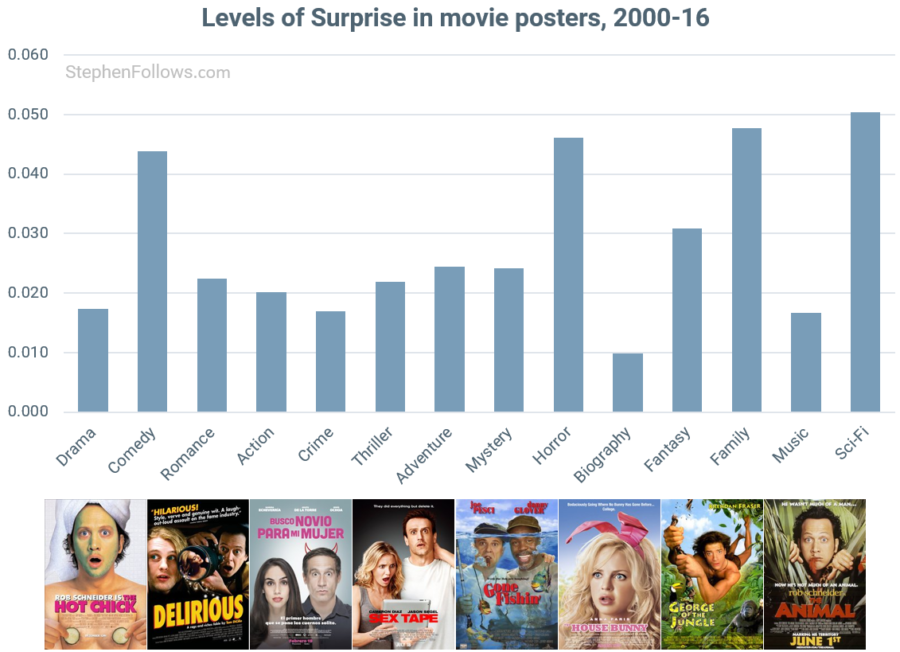

Surprise in movie posters

Sci-Fi movie posters expressed the greatest levels of surprise, presumably at all the flying cars and such.

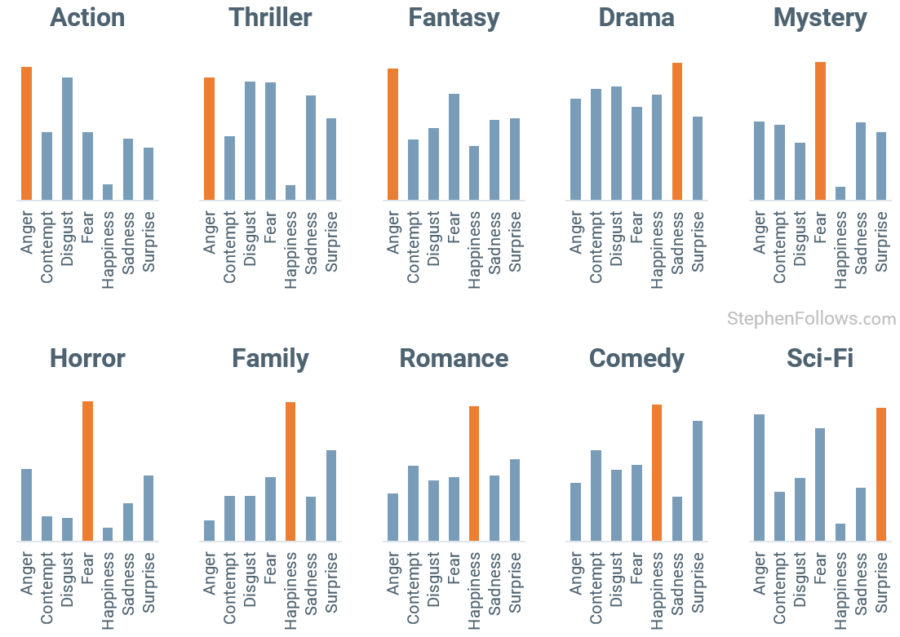

The main emotion by genre

To finish this piece off, I thought it would be fun to present the data another way. Namely, to look at what is the most significant emotion shown on movie posters for each major genre.

To do this, I cannot just use the raw data provided by the Azure Emotion machine, for two reasons. First, some emotions are more subtle than others (e.g. when happiness is on display it is often a lot more pronounced than when disgust is showing). Second, some emotions are much rarer than others (across the dataset, there was over eighteen times as much happiness as there was disgust).

Therefore, the charts below show the emotions which are disproportionately found within each genre, compared to the overall average. For example, Horror movies had well above the average level of fear, and below-average levels of all other emotions. I have highlighted the emotion which was most disproportionately present in orange for easy identification.
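For readers who want to try the same comparison, here is a minimal Python sketch of one way to measure how over-represented an emotion is within a genre: divide the genre's average score by the average across all posters. This is not the code used for the article. The function name `relative_levels`, the shortened emotion list and the sample scores are all made up for illustration, and using a simple ratio of means is an assumption.

```python
from collections import defaultdict
from statistics import mean

# Made-up poster-level scores, purely for illustration (not the article's data).
posters = [
    {"genre": "Horror", "fear": 0.20, "happiness": 0.30, "disgust": 0.02},
    {"genre": "Horror", "fear": 0.16, "happiness": 0.20, "disgust": 0.02},
    {"genre": "Comedy", "fear": 0.02, "happiness": 0.80, "disgust": 0.01},
    {"genre": "Comedy", "fear": 0.04, "happiness": 0.60, "disgust": 0.03},
]
EMOTIONS = ("fear", "happiness", "disgust")  # shortened list for the example


def relative_levels(rows, emotions=EMOTIONS):
    """Return {genre: {emotion: genre mean / overall mean}}.

    A value above 1 means the emotion is over-represented in that genre.
    """
    overall = {e: mean(r[e] for r in rows) for e in emotions}
    by_genre = defaultdict(list)
    for r in rows:
        by_genre[r["genre"]].append(r)
    return {
        genre: {
            e: mean(r[e] for r in group) / overall[e]
            for e in emotions
            if overall[e] > 0  # skip emotions that never appear at all
        }
        for genre, group in by_genre.items()
    }


levels = relative_levels(posters)
for genre, ratios in levels.items():
    top = max(ratios, key=ratios.get)  # the most over-represented emotion
    print(genre, top, {e: round(v, 2) for e, v in ratios.items()})
```

In this toy data the raw happiness score on the Horror posters is higher than the raw fear score, yet fear is the emotion that stands out once each value is compared with the overall average, which is the reason for not relying on the raw numbers.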

Methodology and Notes

My methodology was as follows: (pun very much intended)

- Collect movie poster images. I collected a movie poster for each of the 7,617 movies which grossed at least one dollar at the US theatrical box office between 1st January 2000 and 31st December 2016. I used IMDb, Wikipedia, and Google Images as sources for the poster images. It’s not uncommon for movies to have multiple posters and variations, to accommodate different usages and locations, so I aimed to find the most commonly used poster for each movie. Where there were different international versions, I opted for the US poster.

- Run them through Microsoft’s Azure Emotion engine, via their API. This tool identifies all faces within a poster and returns data on the location and size of the faces, along with a value for seven emotions (anger, contempt, disgust, fear, happiness, sadness and surprise) and a ‘neutral’ value. These values add up to one, although in most images the biggest value is for ‘neutral’. I chose to leave the neutral values out of this article as I didn’t think they added much to the piece. If you’re interested, the genres with the highest neutral value were Mystery and Thriller, and the ones with the lowest were Family and Comedy.

- Analyse results. Finally, by using metadata on the movies from my previous datasets, I was able to look at how the values differed between genres. My focus today has been on comparing the levels between genres, rather than measuring the absolute levels of each emotion.

Not all movie posters have faces in them, and the Azure tool sometimes struggles if part of a face is obscured.

For example, most humans would say that the poster for Carol is made up of two faces, but the Azure engine cannot spot either. Therefore, my final dataset of emotions comprised 3,035 movie posters (i.e. posters with faces in which the Azure engine could detect emotions).
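The steps above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions rather than the code behind the article: it assumes each poster's result has already been fetched and stored as a list of faces, each with a `scores` dictionary of the eight values. It also assumes that a poster's score is the average across its faces and that a movie can belong to several genres. The helper names (`face`, `poster_scores`, `genre_averages`) and the sample values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# The seven emotions analysed; 'neutral' is deliberately left out.
EMOTIONS = ("anger", "contempt", "disgust", "fear",
            "happiness", "sadness", "surprise")


def face(**scores):
    """Build one made-up face result; unspecified values default to 0."""
    full = {e: 0.0 for e in EMOTIONS + ("neutral",)}
    full.update(scores)
    return {"scores": full}


def poster_scores(faces):
    """Average each emotion over all faces detected on one poster.

    Returns None when no face was detected, so the poster can be skipped.
    """
    if not faces:
        return None
    return {e: mean(f["scores"][e] for f in faces) for e in EMOTIONS}


def genre_averages(posters):
    """Mean poster-level score per genre, ignoring posters without faces."""
    grouped = defaultdict(list)
    for poster in posters:
        scores = poster_scores(poster["faces"])
        if scores is None:
            continue  # no detectable face, so no emotion data
        for genre in poster["genres"]:
            grouped[genre].append(scores)
    return {
        genre: {e: mean(s[e] for s in rows) for e in EMOTIONS}
        for genre, rows in grouped.items()
    }


# Hypothetical results for three posters (values invented for the example).
posters = [
    {"title": "Poster A", "genres": ["Horror"],
     "faces": [face(fear=0.4, neutral=0.6),
               face(fear=0.2, surprise=0.1, neutral=0.7)]},
    {"title": "Poster B", "genres": ["Horror", "Mystery"], "faces": []},
    {"title": "Poster C", "genres": ["Comedy"],
     "faces": [face(happiness=0.9, neutral=0.1)]},
]

averages = genre_averages(posters)
print(sorted(averages))            # genres that ended up with usable posters
print(averages["Horror"]["fear"])  # average fear across Horror posters
```

Poster B has no detected faces, so it contributes nothing, and Mystery does not appear in the output at all. The same filtering is why the final dataset is smaller than the full set of 7,617 posters.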

You can test the Azure Emotion engine for yourself by going to azure.microsoft.com/en-gb/services/cognitive-services/emotion and uploading an image.

Epilogue

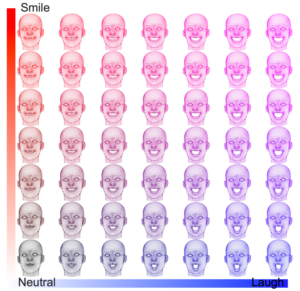

The news which sparked this topic off was the unveiling of Disney’s collaboration with Caltech in tracking real-time audience reactions. The project is called ‘Factorized Variational Autoencoders’ (FVAEs) and they describe it like this:

The factorized variational autoencoder takes images of the faces of people watching movies and breaks them down into a series of numbers representing specific features: one number for how much a face is smiling, another for how wide open the eyes are, etc. Metadata then allow the algorithm to connect those numbers with other relevant bits of data—for example, with other images of the same face taken at different points in time, or of other faces at the same point in time.

The system is so effective that, after only a few minutes, it can predict how each audience member will respond to the rest of the movie. You can read their scientific paper on the project here.

This system is an inevitable consequence of new technology meeting old desires; Hollywood has been testing its products on audiences for almost as long as it has been making movies. In 1939, an early test audience suggested that MGM should remove ‘Over the Rainbow’ from ‘The Wizard of Oz’ as it was slowing the movie down. Den of Geek has a great article on the topic entitled “51 films, and how they were affected by test screenings”.

If you’re unsure of how you feel about these developments, take a picture of how you looked when you heard the news and feed it into the Azure Emotion machine. Then you’ll know your exact level of surprise, fear, disgust…
